In this article we'll introduce key concepts of recommendation systems with TensorFlow, including what they are and how to build one.

Recommendation systems are one of the most successful and widely used business applications of machine learning. From news articles, videos on YouTube, movies on Netflix, and products on Amazon—recommendation systems play a large role of our everyday lives.

This article is based on notes from the final course in the Advanced Machine Learning with TensorFlow on GCP Specialization and is organized as follows:

- What are Recommendation Systems?

- Overview of Content-based Recommendation Systems

- Overview of Collaborative Filtering

- Neural Networks for Recommendation Systems

- Recommendation System Pitfalls

- Building a Simple Vector-Based Model for Content Recommendations

- Neural Networks for Content-Based Recommendation Systems

- Building a Collaborative Filtering System

Stay up to date with AI

What are Recommendation Systems?

Also referred to as recommendation engines, a recommendation system involves both a data pipeline to collect input data and a machine learning algorithm to make recommendation predictions.

Recommendation systems aren't always just about recommending content or products to users, they can also involve recommending users for products, for example in the case of recommending ads to users, which is known as targeting.

Others example of recommendation systems includes Google Maps recommending a route to avoid traffic or Gmail recommending a smart reply to an email.

Recommendation systems are all about personalization.

Overview of Content-Based Recommendation Systems

If you want to recommend content to users, for example movies, there are several ways to go about this. First, you can use a content-based recommendation system, which uses the metadata about the content. For example, a movie will have metadata about the genre, cast, and so on.

There is also user that has watched and rated a few movies and need to recommend them a new one from the database. At the most basic level, we can take the genre of movie the user has watched or liked before and recommend more of those.

This wouldn't need any machine learning and would be a simple rule-based engine that assigns tags to movies and users.

As we'll discuss bellow, content-based filtering uses item-factors to recommend new items that are similar to what the user has previously liked, either explicitly or implicitly.

Overview of Collaborative Filtering

In the case of collaborative filtering, you don't have any metadata about the products or content. Instead, the system learns about item and user similarity from the interaction data.

We can store the the data in a matrix that ties the interaction between a user and the item based on how they rated it. This matrix, however, would get very large, very fast.

If you have millions of users and millions of movies in the database, users will have only watched a handful of the movies. This means the matrix is both very large, but also very sparse.

The idea of collaborative filtering is that the large, sparse user by item matrix can be approximated by the product of two smaller matrices called user factors and item factors.

The factorization splits the matrix into row factors and column factors that are essentially user and item embeddings.

In order to determine if a user will like a movie, all you need to do is take the row corresponding with the user and the column corresponding to the movie and multiply them to get the predicted rating.

Neural Networks for Recommendation Systems

One of the main benefits of collaborative filtering is that you don't need to know any metadata about the item and you don't need to do any market segmentation of users.

As long as there is an interaction matrix you can use that to make predictive recommendations.

If you have both metadata and an interaction matrix you can use a neural networks to create a hybrid content-based, collaborative filtering, and knowledge-based recommendations system. We'll discuss knowledge-based systems in another article.

Real-world recommendation systems are typically a hybrid of these three approaches and are combined into a machine learning pipeline. This production-level ML pipeline will facilitate the continuous re-training of the recommendation system as new ratings data comes in.

Recommendation System Pitfalls

There are a few common difficulties you may encounter in building a recommendation engine, these include:

- Sparse data: Many items in a matrix will have no or few user ratings.

- Skewed data: The matrix may also be skewed, as certain properties are much more popular than others.

- Cold start problem: This occurs when there aren't enough interactions for users or items.

- Explicit data is rare: Often users will not provide an explicit rating of an item, in which case the system will need to use implicit factors such as the number of clicks, video watch time, and so on.

With enough data, we can train a neural network to provide an explicit rating based on implicit data.

Building a Simple Vector-Based Model for Content Recommendations

In this section, we'll look at how content-based recommendation engines work in more detail.

Content-based filtering uses item features to recommend new items that are similar to what the user has liked in the past.

For example, if a user has rated a selection of movies with either a like or a dislike, we want to determine which movie to recommend next. Given a list of all movies in the corpus, if a movie is similar to another that the user ranked highly, that one will be recommended next.

Below we'll look at how to measure the similarity of elements in an embedding space and the mechanics of content-based recommendation systems.

Measuring Similarity

In the movie example, one way to measure similar is to consider the various genres or themes in the movie. We can also measure how similar two users are in the same way, or by looking at movies they've liked in the past.

For a machine learning model, we want to compare movies and users, so we need to define the notion of similarity more rigorously.

This is typically done by putting properties or features of items and users in the same embedding space and comparing how close they are two one another.

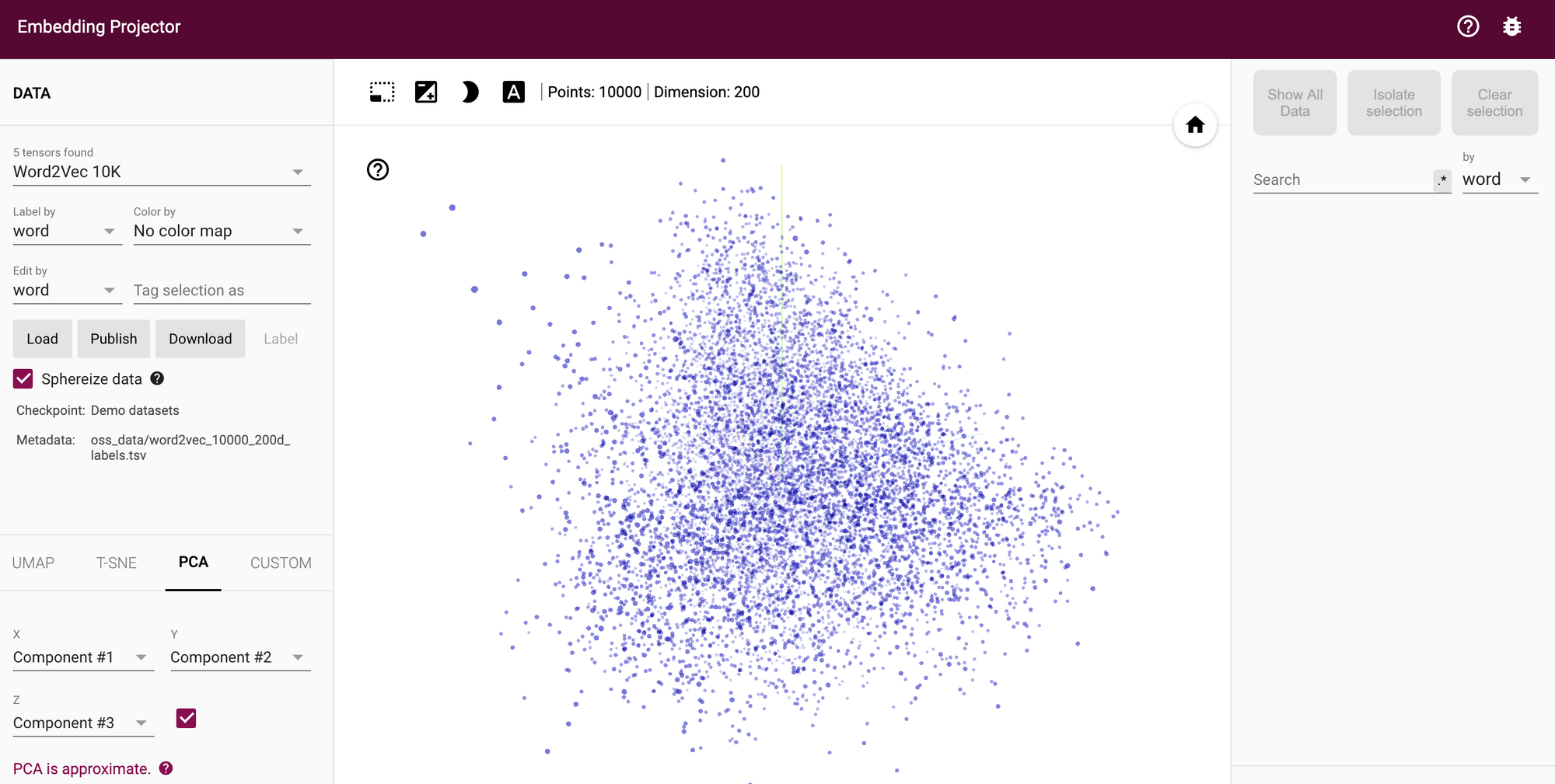

An embedding is a map from our collection of items to some finite dimensional vector space.

This provides a way to have a finite vector value for the representation of items and users in the dataset. Embeddings are commonly used to represent input features in machine learning.

One way to visualize embeddings is with TensorFlow's embedding projector, which allows you to visualize high-dimensional data:

A similarity measure is a metric for items in an embedding space.

A commonly used similarity measure is the dot product, which can computed for two vectors as follows:

$$s (a\overrightarrow, b\overrightarrow) = \sum_i a_i, b_i$$

The cosine similarity is another popular similarity metric as is computed as follows:

$$ s (a\overrightarrow, b\overrightarrow) = \frac{\sum_i a_i b_i}{|a\overrightarrow| |b\overrightarrow|}$$

User Vectors

If, for example, we consider a single user and only seven movies in the dataset. The user has rated three of the movies and we want to figure out which of the four remaining to recommend.

We'll also assume a 1-10 rating scale and each of the movies has predetermined features within five genres. Given the previous movie ratings, we can describe the user in terms of the same features or genres we use to define movies.

To do this, we scale each feature by the user's rating and normalize the resulting vector. This is referred to as the user feature vector.

This vector gives us an idea of where they fit within the embedding space of features. This gives us a five-dimensional vector in the feature space embedding, and the user feature vector is the normalization of that vector.

Making Recommendations with a User Vector

We can now use the user feature vector and the features of unseen movies to make recommendations.

To do so, we can use the dot product to measure the similarity between the use and the unseen movie.

The dot product is computed by taking the component-wise product across each dimension and summing the results. This means we multiply each movie feature vector component-wise with the user feature vector and add row-wise.

Making Recommendations with Multiple Users

Now that we've reviewed content recommendations for a single user, we need to scale the technique for multiple users.

With multiple users, each row of the user-item matrix will correspond to a user and each column corresponds to a movie.

We also still have an item-feature matrix for the movies and genres. The item-feature matrix is a k-hot encoding of the features used to describe movies.

In TensorFlow, we can initialize the constants by creating constant tensor values for the movies and features with tf.constant.

Once we have the collection of matrices, we can stack them together using tf.stack for a complete weighted user feature tensor.

We won't cover the complete code for this content-based recommendation system in this article, although you can find it in Week 1 of this course on Recommendation Systems with TensorFlow on GCP.

Neural Networks for Content-Based Recommendation Systems

Instead of the tensor or matrix operation approach to content-based recommendation systems discussed above, we can also use a neural network—particularly a supervised machine learning algorithm.

Given a user's features and a movie's features, we can predict the rating that a user might give. From a classification perspective, we can also try and predict which movie the user will want to watch next.

$$f (user features, movie features) \xrightarrow{?} rating$$

$$f (user features, movie features) \xrightarrow{?} movie id$$

For the user features, we can use things like age, location, language, gender, and so on. For movie features we can include genre, duration, director, actors, and so on.

Building a Collaborative Filtering System

Now that we've reviewed how content-based recommendation systems work, let's look at how collaborative filtering systems work in more detail. To do so, we'll discuss how to use user-item interaction data and find similarities.

One of the drawbacks with content-based recommendation systems is that it only makes predictions based on known features in the local embedding space.

If we don't know the best factors to compare item similarity with, collaborative filtering can be a better option for the following reason:

Collaborative filtering learns latent factors and can explore outside the user's personal bubble.

Collaborative filtering solves two problems at once—it uses similarities between items and users simultaneously in an embedding space.

To get around the sparse matrix issue discussed above, collaborative filtering uses matrix factorization.

Collaborative filtering also uses explicit—i.e. ratings—and implicit user feedback, such as how they interacted with the item.

Embedding Users & Items for Collaborative Filtering

As mentioned, content-based systems only use embedding spaces for items, whereas collaborative filtering uses both users and items.

We can either choose the number of dimensions using predefined features or we use latent features that are learned from data—more on this below.

In order to find the interaction value between users and items, we can simply take the dot product between each user-item pair in the matrix.

Users and items can be represented as d-dimensional points within an embedding space.

From there, embeddings can be learned from data.

In this case, we're compressing data to find the best generalities to use, which are referred to as latent factors.

Instead of defining features and assigning values along a coordinate system, we use the user-item interaction data to learn the latent factors that best factorize the interaction matrix into user factor embedding and an item factor embedding.

Factorization splits the very large user-item interaction matrix into two smaller matrices of rows factors for users and column factors for items.

We can think of the two factor matrices as essentially user and item embeddings.

Factorization Techniques

We've discussed one factorization technique for collaborative filter: matrix factorization, which works as follows:

- We factorize the user-interaction matrix into user factors and item factors

- Given a user ID, we multiply by item factors to predict the rating of all items

- We return the top $k$ rated items to the user

$$A \approx U x V^T$$

where:

- $A$ is the user-item interaction matrix

- $U$ is the user-factor matrix

- $V^T$ is the item-factor matrix

Since this is an approximation, we want to minimize the squared-error between the original matrix and the product of the two-factor matrices.

This resembles least-squares problem, which can be solved in several ways such as:

- Stochastic gradient descent (SGD)

- Singular value decomposition (SVD)

- Alternating least squares (ALS)

- Weight alternating least squares (WALS)

You can learn more about how each of these options compares in this Google Cloud tutorial. You can also find an implementation of the WALS algorithm in TensorFlow here.

Summary: Recommendation Systems

Recommendation systems are one of the most widely used applications of machine learning in our everyday lives.

Recommendation systems involve both the use of a data pipeline to collect and store data, as well as machine learning model to make recommendation predictions.

There are three main categories of recommendation systems: content-based systems, collaborative filtering, and knowledge-based systems. Production-level recommendation systems will typically use all three methods in an end-to-end machine learning pipeline.