In this guide, we'll discuss key concepts and use cases of the most popular large language model (LLM) right now: GPT-3.

In particular, we'll discuss:

- What is GPT-3?

- Use cases of GPT-3

- How GPT-3 works under the hood

- The benefits & limitations of using GPT-3

- Overview of the GPT-3 models you can use

- How to use the GPT-3 API

- Fine-tuning GPT-3 for your application


What is GPT-3?

GPT-3 stands for Generative Pre-trained Transformer 3 and is a large language model (LLM) capable of performing a number of text-related tasks such as machine translation, question answering, and text generation.

GPT-3 has been making waves in the AI community since its release in mid-2020, and more recently in the mainstream media with ChatGPT, which uses GPT-3.5, crossing 1 million users in just 5 days...

ChatGPT launched on wednesday. today it crossed 1 million users!

— Sam Altman (@sama) December 5, 2022

GPT-3 is also referred to as a natural language understanding (NLU) model, and its ability to generate human-like text has captured the attention of the media and seems to be the only thing Twitter has been talking about lately.

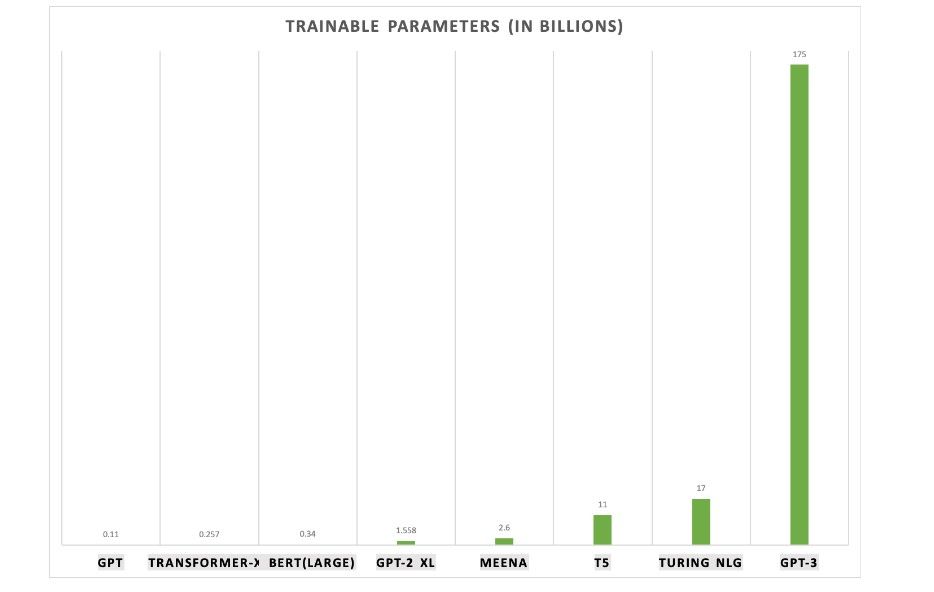

GPT-3 achieves this remarkable performance because it was trained on a dataset of hundreds of billions of words, filtered down from about 45 TB of raw text data. With 175 billion parameters, it was the largest neural network of its kind when it was released.

You can find the original GPT-3 paper here.

Use cases of GPT-3

With its open API and ability to be fine-tuned to your specific use case, the applications of GPT-3 are nearly limitless. Here are a few of the most common use cases so far:

Content Creation

As the most obvious application, GPT-3's ability to generate text that is nearly indistinguishable from human-written content makes it an excellent co-writing assistant. With tools like Jasper.ai and Copy.ai, many marketers are now incorporating GPT-3 into their creative process to combat writer's block, create outlines, and much more.

Machine Translation

Another common use case of GPT-3 is machine translation. Although it was not trained specifically as a translation system, GPT-3 can translate text between many languages with good fluency, and it tends to perform best when translating into English.

Question Answering

GPT-3 can be used to answer questions, making it a valuable tool for research, customer service, and many other fact-checking applications. For example, GPT-3 could be used to create a question-answering bot that can generate detailed answers to questions about topics like history, or a company could use it for its own knowledge base.

How GPT-3 works under the hood

GPT-3 uses a deep learning architecture known as a transformer.

Transformers were first introduced in the paper "Attention Is All You Need", written by members of the Google Brain team and their collaborators, and released in 2017.

The transformer architecture is based on the idea of self-attention, which enables the model to focus on and prioritize specific parts of the input text while giving less weight to others.

This makes transformers significantly more efficient to train than previous language architectures, because the whole input can be processed in parallel, which in turn lets models like GPT-3 learn from a much larger amount of data.

Many of the previous language model architectures relied on recurrent neural networks (RNNs), which are sequential models, meaning they read the input text one word at a time. As you can imagine, this is an inefficient process: each step depends on the previous one, so the work cannot be done in parallel, and information from early words tends to fade over long inputs.

In the transformer architecture, on the other hand, each item in the input sequence is represented by a vector, which is then transformed by a sequence of matrix operations. These vectors are then passed through several "attention heads", which over time learn to focus on certain key parts of the input sequence.

The attention heads are then combined to produce a final vector, which is transformed into the output sequence. This process is repeated over and over again for every item in the input sequence.
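To make the idea of an attention head more concrete, here is a minimal sketch of scaled dot-product self-attention for a single head, written in plain Python. It is an illustration only, not GPT-3's actual implementation: the token vectors are made up, and the sketch leaves out the learned projection matrices, the multiple heads, and the masking that a real model uses.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention for a single head.

    Each argument is a list of vectors, one per token.
    Returns the output vectors and the attention weights.
    """
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score this token against every token in the sequence.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
            for k in keys
        ]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three made-up 2-dimensional token vectors (hypothetical data). A real
# model derives separate queries, keys, and values via learned projections.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 2) for w in row])
```

Each printed row shows how much one token attends to every token in the sequence. Because every row is computed independently of the others, the rows can be calculated in parallel, which is the efficiency advantage over reading the text one word at a time.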

The benefits & limitations of GPT-3

GPT-3 has several advantages over previous language models:

- It is much larger, which gives it more "learned" knowledge (i.e. it was trained on a large portion of the public internet)

- It is available through an API, which makes it accessible for third-party applications

- It can be "fine-tuned" for specific applications and use cases such as legal text, medical records, or financial documents

GPT-3 also has several important limitations to be aware of and consider:

- Incorrect answers: Its responses are far from perfect, and it can sometimes generate incorrect answers. Stack Overflow recently banned responses from ChatGPT for this very reason

- Bias: Since it was trained on a vast amount of human-written text, GPT-3 can reproduce the biases present in that text in its responses

- Interpretability: Also, given the nature of its neural network architecture, it can be difficult if not impossible to interpret why the LLM arrived at a particular response

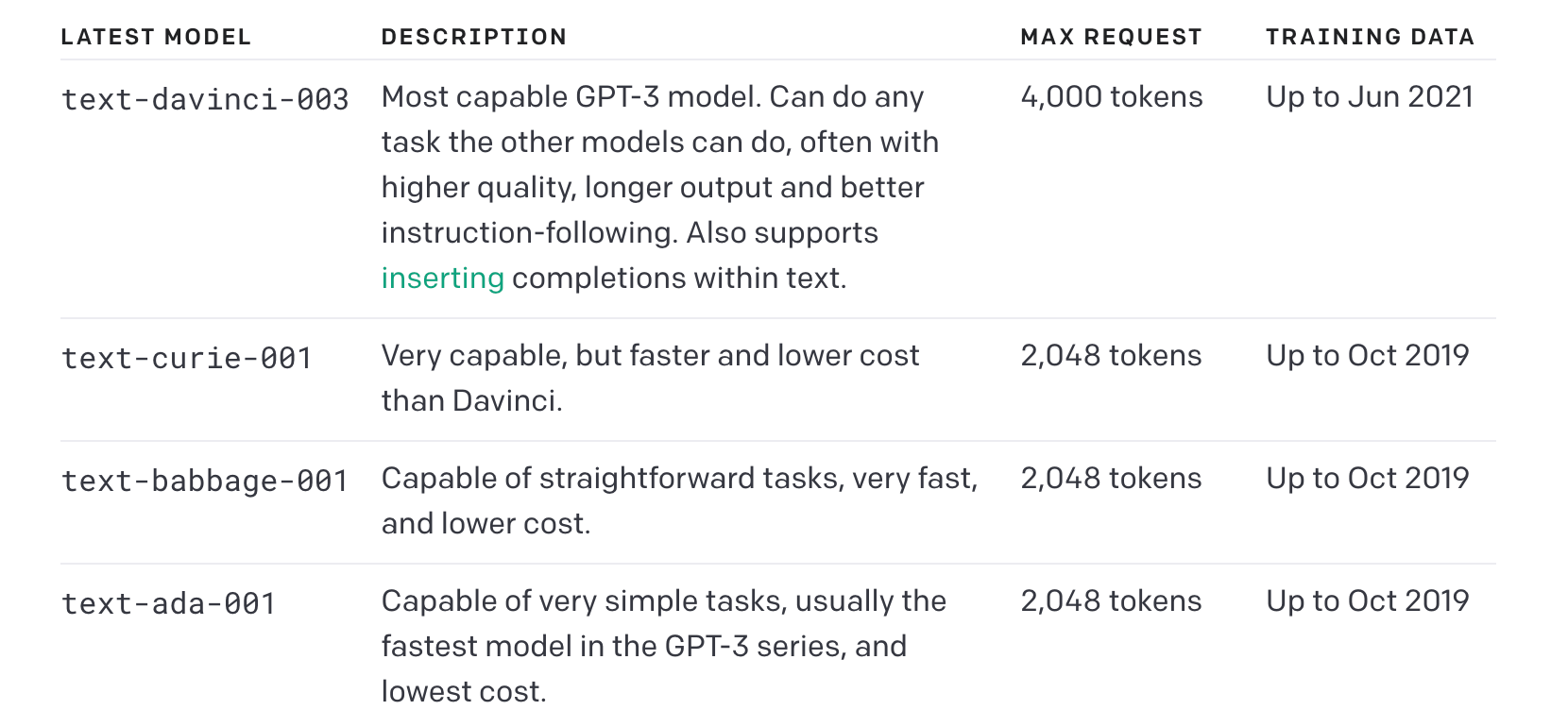

GPT-3 Models You Can Use

GPT-3 is available in four different sizes, each with a different use case, cost, and speed. As OpenAI highlights:

Davinci is the most capable model, and Ada is the fastest.

- text-davinci-003

- text-curie-001

- text-babbage-001

- text-ada-001

How to use GPT-3

GPT-3 can be accessed through its API, which allows you to build AI-based applications on top of the language model.

More recently, GPT-3.5 can also be accessed through the aforementioned ChatGPT interface.

To make use of the GPT-3 API, you just need to create an API key here. Once a key has been created, you include it with each request to authenticate, and in the request itself you specify the model and the prompt describing the task you want it to perform.
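As a rough illustration, the sketch below builds (but does not send) a request to OpenAI's completions endpoint using only the Python standard library. The helper name `build_completion_request`, the `OPENAI_API_KEY` environment variable, the placeholder key, and the default parameter values are assumptions made for this example. Model availability may also have changed since this guide was written, so check the current OpenAI documentation before relying on it.

```python
import json
import os
import urllib.request

# Completions endpoint as documented by OpenAI when this guide was written.
API_URL = "https://api.openai.com/v1/completions"

def build_completion_request(prompt, model="text-davinci-003",
                             max_tokens=64, temperature=0.7, api_key=None):
    """Build (but do not send) an HTTP request for a text completion.

    This helper is hypothetical and exists only for illustration.
    """
    api_key = api_key or os.environ.get("OPENAI_API_KEY", "")
    payload = {
        "model": model,              # which GPT-3 model to use
        "prompt": prompt,            # the text describing the task
        "max_tokens": max_tokens,    # upper bound on the reply length
        "temperature": temperature,  # higher values give more varied output
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )

request = build_completion_request(
    "Translate to French: Good morning",
    api_key="YOUR_API_KEY",  # placeholder, not a real key
)
print(request.get_method(), request.full_url)
print(json.loads(request.data))

# To actually send it (requires a valid key and network access):
# with urllib.request.urlopen(request) as response:
#     result = json.load(response)
#     print(result["choices"][0]["text"])
```

Switching to a smaller, faster model is just a matter of passing a different `model` value, such as `text-ada-001`.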

The API also allows you to "fine-tune", also referred to as training, each model for your specific applications. For example, a lawyer may fine-tune GPT-3 to answer legal-related questions, or a doctor could train it to perform medical diagnoses, and so on.

Fine-tuning GPT-3 for your application

As mentioned, developers can also fine-tune GPT-3 using their own dataset in order to design a custom version specifically for their application.

This allows for more efficient use of the GPT-3 model and can improve results for domain-specific tasks.
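To give a sense of what "your own dataset" can look like, here is a small sketch that prepares training examples as JSON Lines, with one prompt and completion pair per line. This follows the format OpenAI documented for GPT-3 fine-tuning at the time of writing, and the current format may differ. The support-ticket examples, the `->` separator, and the `to_jsonl` helper are all hypothetical.

```python
import json

# Hypothetical training examples for a support-ticket classifier.
examples = [
    {"prompt": "Ticket: My invoice is wrong ->", "completion": " billing"},
    {"prompt": "Ticket: The app crashes on login ->", "completion": " technical"},
    {"prompt": "Ticket: How do I change my plan? ->", "completion": " account"},
]

def to_jsonl(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    for record in records:
        # Each record needs exactly a prompt and a completion.
        if set(record) != {"prompt", "completion"}:
            raise ValueError(f"unexpected keys in record: {sorted(record)}")
    return "\n".join(json.dumps(r) for r in records) + "\n"

jsonl_text = to_jsonl(examples)
print(jsonl_text, end="")
```

The resulting text would then be saved to a file and uploaded as training data. In practice a useful fine-tuned model needs far more than three examples.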

Fine-tuning GPT-3 is likely to become a significant business in the coming years as more companies use it to increase efficiency and lower costs in the workplace.

We'll discuss exactly how to fine-tune GPT-3 in a future article, but for now you can find the details in OpenAI's fine-tuning documentation.


Summary: What is GPT-3?

As we've discussed, GPT-3 is a large language model (LLM) capable of performing a number of text-related tasks such as generating code, machine translation, content creation, and so much more.

Aside from the base model's remarkable capabilities, it can be fine-tuned to your own specific use case, and many are calling ChatGPT (and more broadly GPT-3) the "iPhone" moment for AI:

Could ChatGPT be AI’s iPhone moment? https://t.co/2XkKOEaZD0 pic.twitter.com/22Cb1zXndZ

— Bloomberg (@business) December 12, 2022

In other words, the number of businesses and apps built on top of GPT-3 over the next decade will be remarkable to watch...or take part in if you're prepared.