In this article we'll discuss a subset of quantitative modeling: regression models.

In particular, we'll discuss what a regression is, common use cases of regression models, interpreting the output of regression models, and more.

This article is based on notes from Week 4 of this course on Fundamentals of Quantitative Modeling and is organized as follows:

- What is a Regression Model?

- Use Cases of Regression Models

- Interpretation of Regression Coefficients

- R-Squared and Root Mean Squared Error (RMSE)

- Fitting Curves to Data

- Multiple Regression

- Logistic Regression

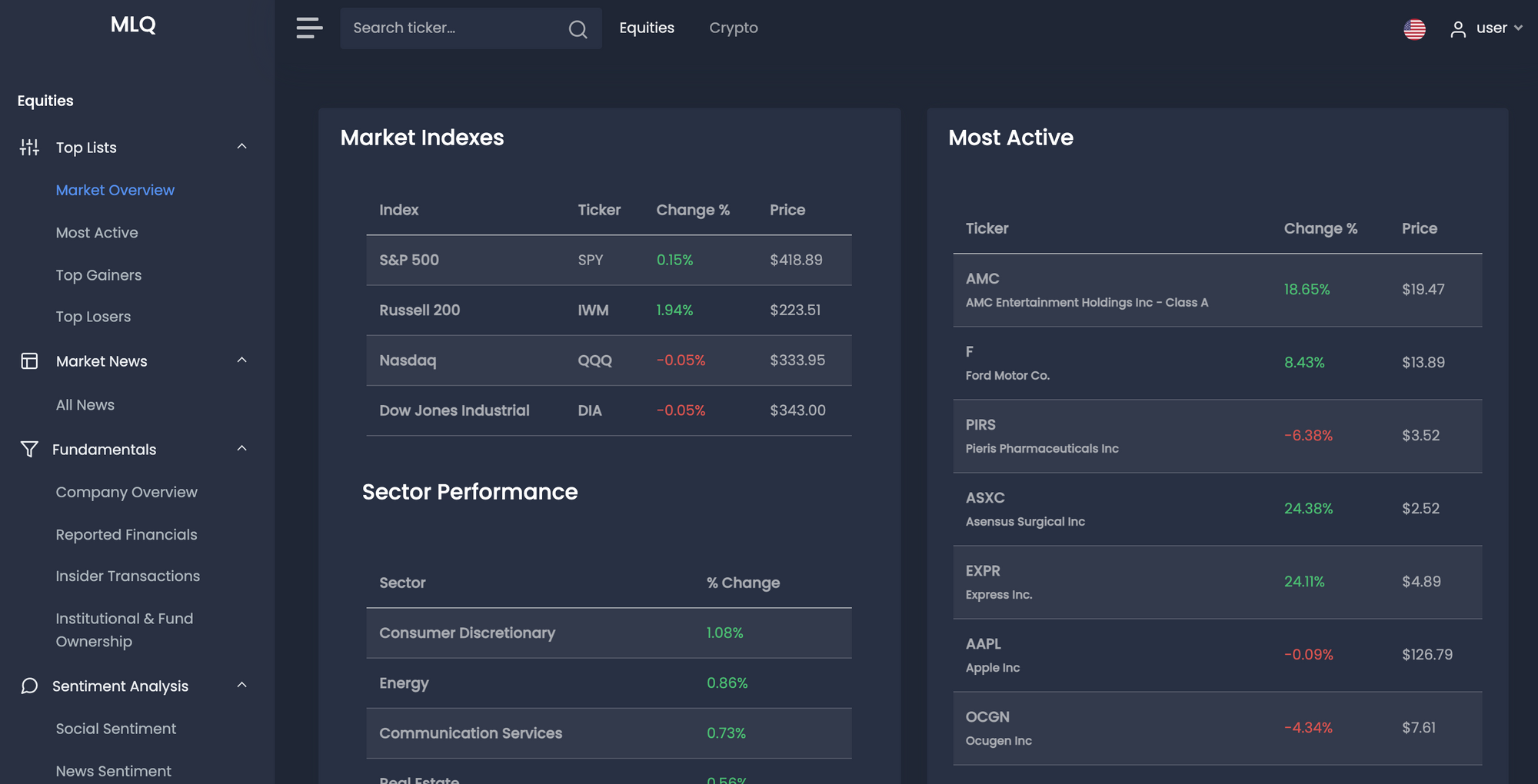


What is a Regression Model?

A simple regression model uses a single predictor variable $X$ to estimate the mean of an outcome variable $Y$, as a function of $X$.

For example, if we have a plot with the $X$ coordinate representing the weight of a diamond and the $Y$ coordinate representing the price, a regression model tells you what the price should be at any given value of $X$.

In this case, the predictor variable is the diamond's weight and the outcome variable is the price. A regression model allows you to formalize the concept that a heavier diamond will have a higher price.

If the relationship between $X$ and $Y$ is modeled with a straight line, it is referred to as linear regression:

$$E(Y|X) = b_0 + b_1X$$

A commonly used number to describe the linear association between two variables, or how close the points are to the line, is called correlation.

Correlation is denoted by the letter $r$ and will always be between $-1$ and $+1$.

Negative correlation values indicate a negative association, positive values indicate a positive association, and a correlation of 0 means there is no linear association between the variables.
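
As a minimal sketch of how $r$ can be computed, the snippet below uses a small made-up dataset (the `x` and `y` values are illustrative assumptions, not data from the course) and compares a by-hand calculation with NumPy's `np.corrcoef`:

```python
import numpy as np

# Made-up illustrative data (an assumption, not from the article).
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.0, 4.0, 5.0, 4.0, 5.0])

# Deviations of each variable from its own mean.
dx = x - x.mean()
dy = y - y.mean()

# Correlation: how x and y vary together, scaled by the spread of each.
r = (dx * dy).sum() / np.sqrt((dx ** 2).sum() * (dy ** 2).sum())

# NumPy's built-in correlation matrix; the off-diagonal entry is r.
r_numpy = np.corrcoef(x, y)[0, 1]

print(round(r, 4))  # 0.7746, a fairly strong positive linear association
```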


Use Cases of Regression Models

One of the most common uses of regression models is predictive analytics.

A regression model lets you produce a prediction interval: a range of feasible values in which the outcome is likely to fall.

As we'll discuss below, regression models also allow you to interpret coefficients from the model.

Returning to the diamond pricing example, regression models also provide a numerical measure of the amount of variability in the outcome that's explained by the predictor variables.

The regression line, or the line of best fit, is commonly calculated using the method of least squares, which serves as the optimality criterion.

The method of least squares works by finding the line that minimizes the sum of the squares of the vertical distances from the points to the line.

A key insight that the regression line allows for is to decompose the observed data into two components:

- The fitted values (the predictions)

- The residuals (the vertical distance from point to line)

Both of these components are useful as:

- The fitted values are the forecasts

- The residuals allow you to assess the quality of the fit

The residual values are often one of the most valuable aspects as they provide insight into the quality of the regression model itself, which can allow you to subsequently improve the model based on these observations.
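
The least squares fit and the fitted-values-plus-residuals decomposition described above can be sketched as follows. The data is made up for illustration, and the closed-form slope and intercept used here are the standard simple-regression formulas rather than anything specific to the course:

```python
import numpy as np

# Made-up illustrative data (an assumption, not from the article).
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.0, 4.0, 5.0, 4.0, 5.0])

# Closed-form least squares estimates for a straight line.
dx = x - x.mean()
b1 = (dx * (y - y.mean())).sum() / (dx ** 2).sum()  # slope
b0 = y.mean() - b1 * x.mean()                       # intercept

# Decompose the observed data into two components.
fitted = b0 + b1 * x      # the predictions on the line
residuals = y - fitted    # vertical distances from points to the line

# The quantity that least squares minimizes.
sse = (residuals ** 2).sum()

print(b0, b1)        # approximately 2.2 and 0.6
print(residuals)     # approximately [-0.8  0.6  1.0 -0.6 -0.2]
```

Each observation equals its fitted value plus its residual, and with an intercept in the model the residuals sum to zero.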

Interpretation of Regression Coefficients

When you're using a quantitative model, it's always a good idea to interpret coefficients if you're able to, regardless of whether it's a deterministic or probabilistic model.

Interpreting coefficients allows you to articulate to others the purpose and insights from the model in a much more understandable way.

For example, let's say we have a regression model for a production process with the run size, or the number of units produced, on the $X$ axis and the time it took for each run on the $Y$ axis.

We can start by fitting a linear regression line on the data, which for this example can be written formally as $E(Y|X) = 182 + 0.22X$.

We can interpret this linear regression model as follows:

- $E(Y|X)$ can be understood as the expected, or average, run time for a given run size.

- The intercept 182 is measured in units of $Y$, which are minutes. The intercept can be interpreted as the time it takes to do a run size of 0, which is more easily understood as the setup time in minutes.

- The slope 0.22 is multiplied by $X$ so it must be measured in $Y/X$, or minutes per item. The slope can be interpreted as 0.22 minutes per additional item, or the work rate in minutes per additional item.

Of course, the interpretation of coefficients will change based on the problem being solved, although this process of interpreting them in an understandable way is a valuable part of the modeling process.
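
To make the interpretation concrete, here is a small sketch that evaluates the fitted line $E(Y|X) = 182 + 0.22X$ from the example. The function name and the run size of 100 items are hypothetical choices for illustration:

```python
# Coefficients from the production example in the text.
SETUP_MINUTES = 182.0     # intercept: time for a run size of 0
MINUTES_PER_ITEM = 0.22   # slope: extra time per additional item


def expected_run_time(run_size):
    """Expected run time in minutes for a given run size (number of items)."""
    return SETUP_MINUTES + MINUTES_PER_ITEM * run_size


# A run of 0 items takes just the setup time.
print(expected_run_time(0))

# A hypothetical run of 100 items: setup time plus 100 items of work.
print(round(expected_run_time(100), 2))

# One additional item adds the slope, about 0.22 minutes.
print(round(expected_run_time(101) - expected_run_time(100), 2))
```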

R-Squared and Root Mean Squared Error (RMSE)

When it comes to linear regression, two of the most commonly used single number summaries include R-squared ($R^2$) and Root Mean Squared Error (RMSE).

$R^2$ measures the proportion of variability in $Y$ that's explained by the regression model. In simple regression it is the square of the correlation, $r$, and it is often expressed as a percentage.

An important point about $R^2$ is that there is no magic number, meaning that it does not need to be above a certain percentage for the model to be useful. Instead, $R^2$ can be thought of as a comparative benchmark as opposed to an absolute.

RMSE measures the standard deviation of the residuals, or the spread of the points about the fitted regression line.

A key difference between $R^2$ and RMSE is the unit of measurement:

- $R^2$ is a proportion, meaning it has no units of measurement

- RMSE is the standard deviation of the residuals, which are vertical distances from the line, so RMSE is measured in the units of $Y$

All other things being equal, we typically prefer higher values of $R^2$, meaning the model explains more variability, and lower values of RMSE, meaning the points are closer to the regression line.
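
Both summaries can be computed directly from the residuals. The sketch below uses made-up data and assumes the common simple-regression convention of dividing by $n - 2$ when computing RMSE (some texts divide by $n$ instead):

```python
import numpy as np

# Made-up illustrative data (an assumption, not from the article).
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.0, 4.0, 5.0, 4.0, 5.0])

# np.polyfit returns coefficients from highest degree down: slope, then intercept.
b1, b0 = np.polyfit(x, y, 1)
residuals = y - (b0 + b1 * x)

sse = (residuals ** 2).sum()        # variability left unexplained by the line
sst = ((y - y.mean()) ** 2).sum()   # total variability in y

# R-squared: proportion of variability in y explained by the model (unitless).
r_squared = 1.0 - sse / sst

# RMSE: standard deviation of the residuals, in the units of y.
# Assumption: n - 2 degrees of freedom (two estimated coefficients).
rmse = np.sqrt(sse / (len(x) - 2))

print(round(r_squared, 3))  # 0.6
print(round(rmse, 3))       # 0.894
```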

Using Root Mean Squared Error

One of the most useful applications of RMSE is as an input into a prediction interval.

Recall that when there is uncertainty in the model, you don't want to just have a single forecast, but instead want a range of uncertainty about the forecast.

With suitable assumptions we can use RMSE to come up with a prediction interval.

In this case, the assumption is that at a fixed value of $X$, the distribution of points about the true regression line follows a Normal distribution centered on the regression line. This is referred to as the Normality assumption.

These normal distributions all have the same standard deviation $\sigma$, which is estimated by RMSE. This is referred to as the constant variance assumption.

In other words, RMSE will be the estimate of the noise in the system with the assumption of normality.

With this information, we can come up with what's referred to as an approximate 95% prediction interval.

As a general rule of thumb and within the range of the observed data, using the Normality assumption and the Empirical Rule, an approximate 95% prediction interval is given by:

$$\text{Forecast} \pm 2 \times \text{RMSE}$$

Part of the modeling process, however, is checking if these assumptions make sense. One way to check the Normality assumption, for example, is to plot the residuals using a histogram, which displays the distribution of the underlying data.

If the distribution is approximately bell-shaped, there is no strong evidence against the Normality assumption.
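
As a rough sketch of the rule of thumb above, the helper below builds an approximate 95% prediction interval from a forecast and an RMSE. The function name, the run size of 100 items, and the RMSE of 16 minutes are hypothetical values chosen only for illustration:

```python
def approx_95_prediction_interval(forecast, rmse):
    """Approximate 95% prediction interval: forecast plus or minus 2 x RMSE.

    Relies on the Normality and constant variance assumptions, and is only
    meant for forecasts within the range of the observed data.
    """
    margin = 2.0 * rmse
    return forecast - margin, forecast + margin


# Forecast from the production example: 182 + 0.22 * run size.
forecast = 182.0 + 0.22 * 100   # hypothetical run size of 100 items

# Hypothetical RMSE in minutes (the text does not give one).
rmse = 16.0

low, high = approx_95_prediction_interval(forecast, rmse)
print(round(low, 1), round(high, 1))  # 172.0 236.0
```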

Fitting Curves to Data

So far we've only discussed fitting a regression line to data that exhibits a linear relationship. In practice, however, a line may not be an appropriate summary of the underlying data.

For example, if we are plotting demand for pet food measured in cases sold against the average price per case, a line may be a bad fit for the data. In this example, we can assume that as higher quantities are sold, the average price drops at an increasing rate due to bulk discounts available.

By simply plotting the data, we may see that there is a curvature in the data. If this is the case, we want to consider adding transformations to the data. By transformations, we mean applying a mathematical function to the data.

As discussed in our Introduction to Quantitative Modeling, the basic transformations we can apply include:

- Linear

- Power

- Exponential

- Log

The log function is one of the most widely used transformations in practice. For example, if we apply a log transform to the price and quantity sold, the relationship appears much more linear.

The regression equation for the log-log model of this example is now:

$$E(\log(\text{Sales})|\text{Price}) = b_0 + b_1\log(\text{Price})$$

In general, when deciding which transformation to choose, we want to use the one that achieves linearity on the transformed scale.
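
A log-log fit can be sketched by regressing the log of sales on the log of price. The data below is synthetic and noise-free, generated from an assumed power law so that the recovered coefficients are easy to check; real demand data would be noisier:

```python
import numpy as np

# Synthetic data from an assumed power law: sales = 1000 * price^(-2).
price = np.array([1.0, 2.0, 4.0, 8.0])
sales = 1000.0 * price ** -2.0

# Fit a straight line on the log-log scale.
# np.polyfit returns the slope first, then the intercept.
b1, b0 = np.polyfit(np.log(price), np.log(sales), 1)


def predict_sales(p):
    """Back-transform the log-scale prediction to the original sales scale."""
    return np.exp(b0 + b1 * np.log(p))


print(round(b1, 3))                  # -2.0, the slope on the log-log scale
print(round(predict_sales(2.0), 1))  # 250.0
```

On the log-log scale the curved relationship becomes a straight line, which is why an ordinary linear fit works after the transformation.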

Multiple Regression

Beyond these simple regression models with a single input, we can also use a multiple regression model.

A multiple regression model allows for the inclusion of many predictor variables. For example, in the diamond dataset, we could add the color of the diamond to improve the model.

With two predictors, $X_1$ and $X_2$, the regression model is:

$$E(Y|X_1, X_2) = b_0 + b_1X_1 + b_2X_2$$
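
One way to fit this two-predictor model is least squares on a design matrix with a column of ones for the intercept. The sketch below uses `np.linalg.lstsq` on synthetic, noise-free data generated from assumed coefficients, so the fit should recover them:

```python
import numpy as np

# Synthetic predictors (illustrative assumptions, not from the article).
x1 = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
x2 = np.array([2.0, 1.0, 4.0, 3.0, 6.0])

# Outcome generated from assumed coefficients b0 = 1, b1 = 2, b2 = 3.
y = 1.0 + 2.0 * x1 + 3.0 * x2

# Design matrix: a column of ones for the intercept, then each predictor.
X = np.column_stack([np.ones_like(x1), x1, x2])

# Least squares solution; lstsq also returns residual and rank information.
coef, _, _, _ = np.linalg.lstsq(X, y, rcond=None)
b0, b1, b2 = coef

print(np.round(coef, 3))  # approximately [1. 2. 3.]
```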

Logistic Regression

In this article we've been discussing linear regression, although regression can take many forms.

Linear regression is often the most appropriate if the outcome variable $Y$ is continuous.

In many business problems, however, the outcome variable is not continuous, but rather discrete, for example:

- Did the stock go up or down from one day to the next? Up/down

- Did a website visitor purchase a product? Yes/no

These outcomes can be viewed as Bernoulli random variables.

Logistic regression is used to estimate the probability that a Bernoulli random variable is a success. Logistic regression estimates this probability as a function of a set of predictor variables.
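
To illustrate how a logistic regression turns predictors into a probability, the sketch below applies the standard logistic function to a linear combination of one predictor. The coefficients and the predictor (minutes a visitor spends on the site) are hypothetical and not fitted to any data:

```python
import math


def logistic(z):
    """Map any real number to a probability strictly between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def purchase_probability(minutes_on_site, b0=-3.0, b1=0.5):
    """Estimated probability of a purchase (the Bernoulli 'success').

    b0 and b1 are hypothetical coefficients chosen for illustration only.
    """
    return logistic(b0 + b1 * minutes_on_site)


print(purchase_probability(6.0))             # 0.5, since -3 + 0.5 * 6 = 0
print(round(purchase_probability(10.0), 3))  # 0.881
```

Unlike a straight line, the output never leaves the range from 0 to 1, which is what makes it usable as a probability.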

Summary: Regression Models

To summarize, regression models allow you to start with data and reverse engineer an approximation of the underlying processes. In particular, regression models reverse engineer the mean of $Y$ as a function of $X$.

In some cases we'll be able to use a simple linear regression model, although often we will need to transform the data if we see a curvature.

Regression models are an important tool for predictive analytics as they explicitly incorporate uncertainty in the underlying data. This uncertainty provides you with a range for these predictive forecasts.

In terms of summarizing the data, correlation is a useful metric for determining how close the points are to a line. Another important step in the quantitative modeling process is the interpretation of regression coefficients.

We also discussed R-squared and how the Root Mean Squared Error, or the standard deviation of the residuals, can be used to approximate 95% prediction intervals, assuming the points are Normally distributed about the regression line.

Finally, we discussed how multiple regression makes use of more than one predictor variable and that logistic regression is used when the outcome variable is dichotomous.