OpenAI held its spring update today, announcing the release of a new model called GPT-4o (“o” for “omni”) capable of reasoning across voice, text, and video. They also announced a desktop app, updated web UI, and more.

In this article, let's review the new GPT-4o model's key capabilities and other important updates from the event.

GPT-4o: Key Capabilities

- GPT-4o will be available to both free & paid users

- GPTs and the GPT store are available to free & paid users

- Vision enables you to upload and chat about images

- Browse allows you to search the web for responses

- Memory allows the model to remember things you discuss for future conversations

- Advanced data analysis allows you to upload data and create charts

- Increased speed of responses for 50 different languages

- Paid users will have up to 5 times the capacity of free users

- GPT-4o is also available on the API and developers can start building with it today

- Compared to GPT-4 Turbo, the GPT-4o API is 2x faster, 50% cheaper, and has 5x higher rate limits
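For developers who want to try the API, here is a minimal sketch of a text-plus-image request to GPT-4o. It assumes OpenAI's Chat Completions endpoint and message format; the helper names `build_request` and `send` and the example image URL are hypothetical and used only for illustration.

```python
import json
import urllib.request
from typing import Optional

# Assumed endpoint: OpenAI's Chat Completions API.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(prompt: str, image_url: Optional[str] = None) -> dict:
    """Build a chat request body for GPT-4o with text and an optional image."""
    content = [{"type": "text", "text": prompt}]
    if image_url is not None:
        # Image input is passed as an extra content part next to the text.
        content.append({"type": "image_url", "image_url": {"url": image_url}})
    return {
        "model": "gpt-4o",
        "messages": [{"role": "user", "content": content}],
    }


def send(payload: dict, api_key: str) -> dict:
    """POST the payload and return the parsed JSON response."""
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


# Placeholder image URL; replace it with a real, publicly reachable image.
payload = build_request("What is in this image?", "https://example.com/cat.png")
print(json.dumps(payload, indent=2))
```

To actually send the request, call `send(payload, api_key)` with your own API key; the reply text should then be under `choices[0].message.content` in the returned JSON, assuming the standard Chat Completions response shape.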

Say hello to GPT-4o, our new flagship model which can reason across audio, vision, and text in real time: https://t.co/MYHZB79UqN

Text and image input rolling out today in API and ChatGPT with voice and video in the coming weeks. pic.twitter.com/uuthKZyzYx

OpenAI (@OpenAI), May 13, 2024

As OpenAI's CTO, Mira Murati, stated:

GPT-4o provides GPT-4 intelligence but is much faster, and it improves on its capabilities across text, audio, and video.

We are looking at the future of interaction between ourselves and machines, and we think that GPT-4o is really shifting the paradigm into the future of collaboration.

Murati discussed how the current implementation of voice mode relies on three underlying models: 1) transcription, 2) intelligence, and 3) text-to-speech.

The issue with this setup is that chaining three models adds a lot of latency, which breaks the feeling of immersion with ChatGPT. As Murati stated:

With GPT-4o, this all happens natively. GPT-4o reasons across voice, text, and vision.

Murati explained that bringing these all together in one model allows OpenAI to offer GPT-4-level intelligence to free users.
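To make the latency point concrete, here is a toy sketch of a cascaded voice pipeline like the one described above. The stage functions are stand-ins rather than real models, and the latency values are made-up placeholders, not measurements; the only point is that each stage must wait for the previous one, so the delays add up.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Stage:
    name: str
    run: Callable[[str], str]  # stand-in for a model call
    latency_s: float           # placeholder latency, not a measured value


def run_cascade(stages: List[Stage], user_input: str) -> Tuple[str, float]:
    """Run the stages one after another and sum their latencies."""
    data, total_latency = user_input, 0.0
    for stage in stages:
        data = stage.run(data)            # each stage waits for the previous one
        total_latency += stage.latency_s  # so the delays accumulate
    return data, total_latency


# Hypothetical stand-ins for the three models in the previous voice mode.
voice_mode = [
    Stage("transcription", lambda audio: f"text({audio})", 0.5),
    Stage("intelligence", lambda text: f"reply({text})", 1.5),
    Stage("text-to-speech", lambda text: f"audio({text})", 0.5),
]

output, latency = run_cascade(voice_mode, "user_audio")
print(output)
print(f"total latency: {latency} s")
```

In this sketch only plain text passes between the stages, which is one way to picture why a single model that handles audio natively can respond faster than three models chained together.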

Real-time conversational speech

One of the demos OpenAI showed was real-time conversational speech. Here are the key differences between this capability and the previous voice mode:

- You can now interrupt the model and don't have to wait for it to finish the response

- The model has real-time responsiveness, removing the previous 2-3 second delay

- The model picks up on emotion and can generate voice in a number of emotive styles

- You can share real-time video with the model

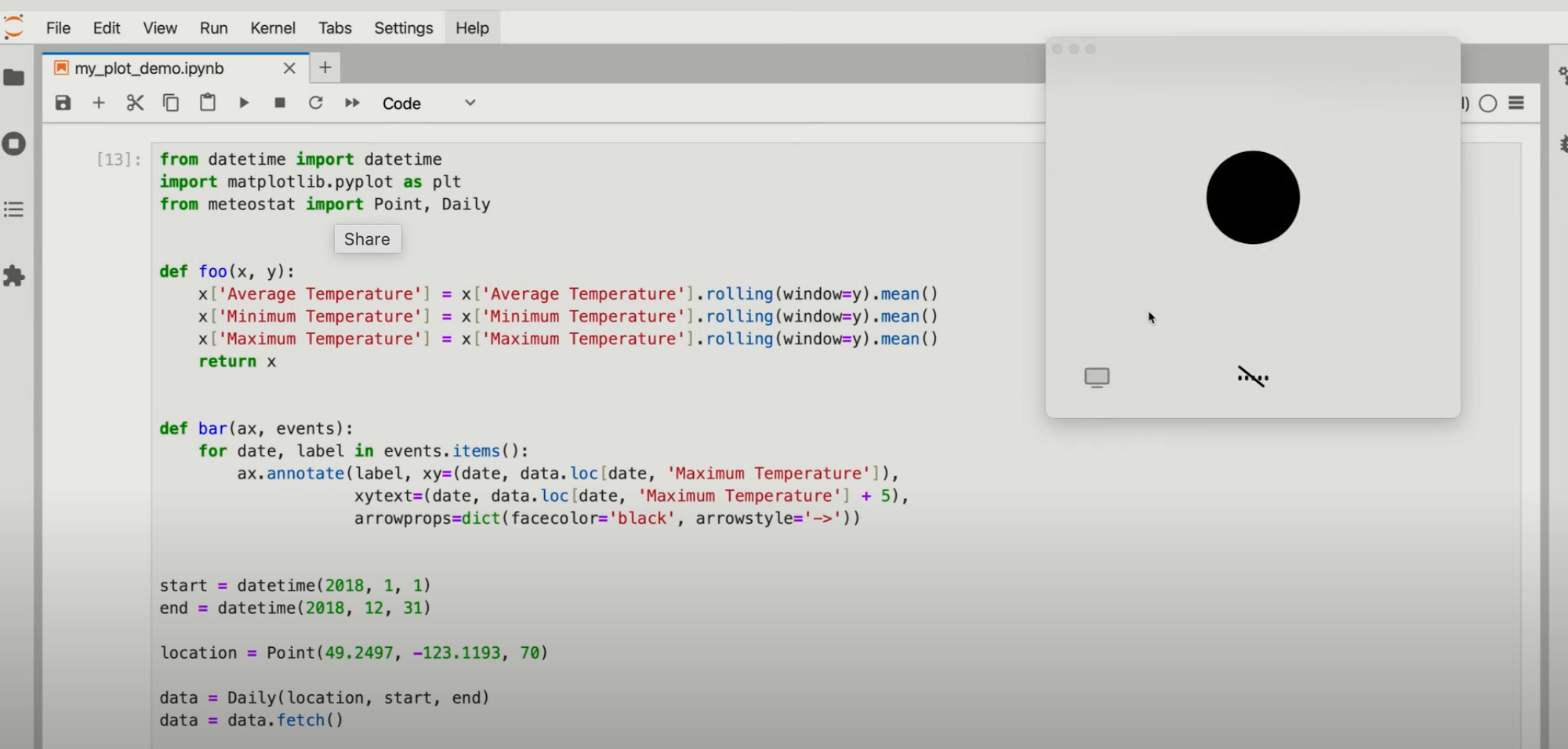

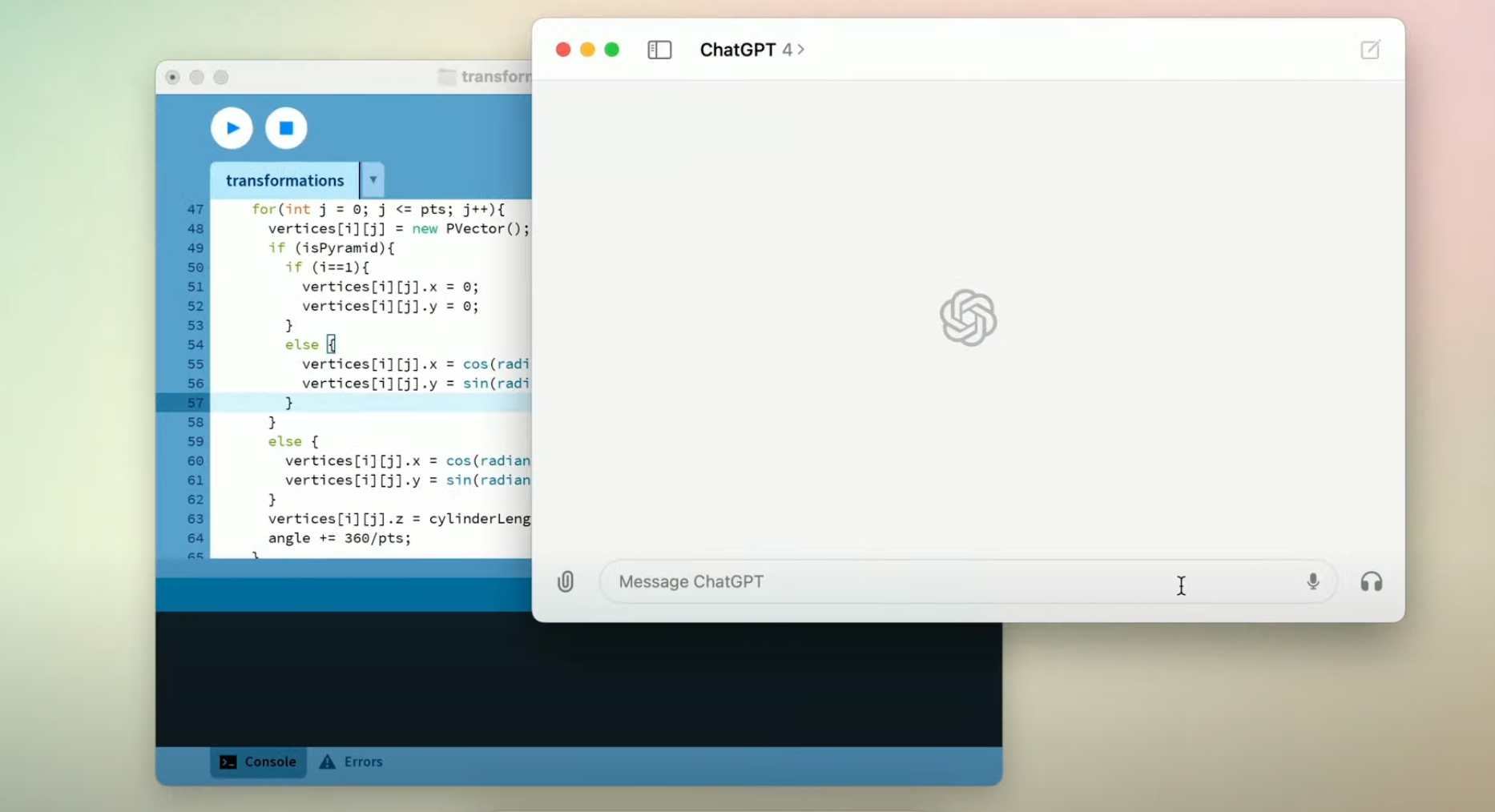

ChatGPT desktop app

Here are a few updates about the new desktop app:

- With the app window open in the top right of your screen, ChatGPT will be able to hear everything you say

- It can use vision, or, if you copy code or text, it will send it to ChatGPT so you can discuss it

- If you enable vision, ChatGPT can see your screen and discuss what you're working on

Watch the full update below

You can also find Sam Altman's thoughts on GPT-4o below: