OpenAI has released yet another major update to the GPT-3.5 and GPT-4 API: function calling.

As OpenAI writes in their blog post:

Developers can now describe functions to `gpt-4-0613` and `gpt-3.5-turbo-0613`, and have the model intelligently choose to output a JSON object containing arguments to call those functions.

This is a new way to more reliably connect GPT's capabilities with external tools and APIs.

If you've been following our tutorials, you know that this functionality was previously only possible with tools like LangChain. Bringing this capability directly into the GPT API is, in my opinion, going to be a massive change for programming and working with LLMs in general.

In particular, the ability to incorporate function calls to external APIs addresses the main limitation of the GPT models, namely their lack of access to external data sources and up-to-date information beyond the 2021 knowledge cutoff date.

This also means that users can effectively make their own API calls entirely with natural language: GPT-4 will intelligently pick the appropriate function and its arguments, and once your code has run that function, summarize the results in a user-friendly way.

In this guide we'll walk through how to get started with function calling using GPT-4, following the example from OpenAI's documentation.

Understanding Function Calls in GPT-4

Next up, let's go through the example provided by OpenAI to understand how function calling works. As the docs highlight:

...you can describe functions to `gpt-3.5-turbo-0613` and `gpt-4-0613`, and have the model intelligently choose to output a JSON object containing arguments to call those functions.

A key point is that the `ChatCompletion` model doesn't actually execute the function; rather, it generates the JSON that can be used to perform the call.

Under the hood, functions are injected into the system message in a syntax the model has been trained on.

In other words, function calling allows us to reliably retrieve the structured data we need from GPT-4 to make our external API call.

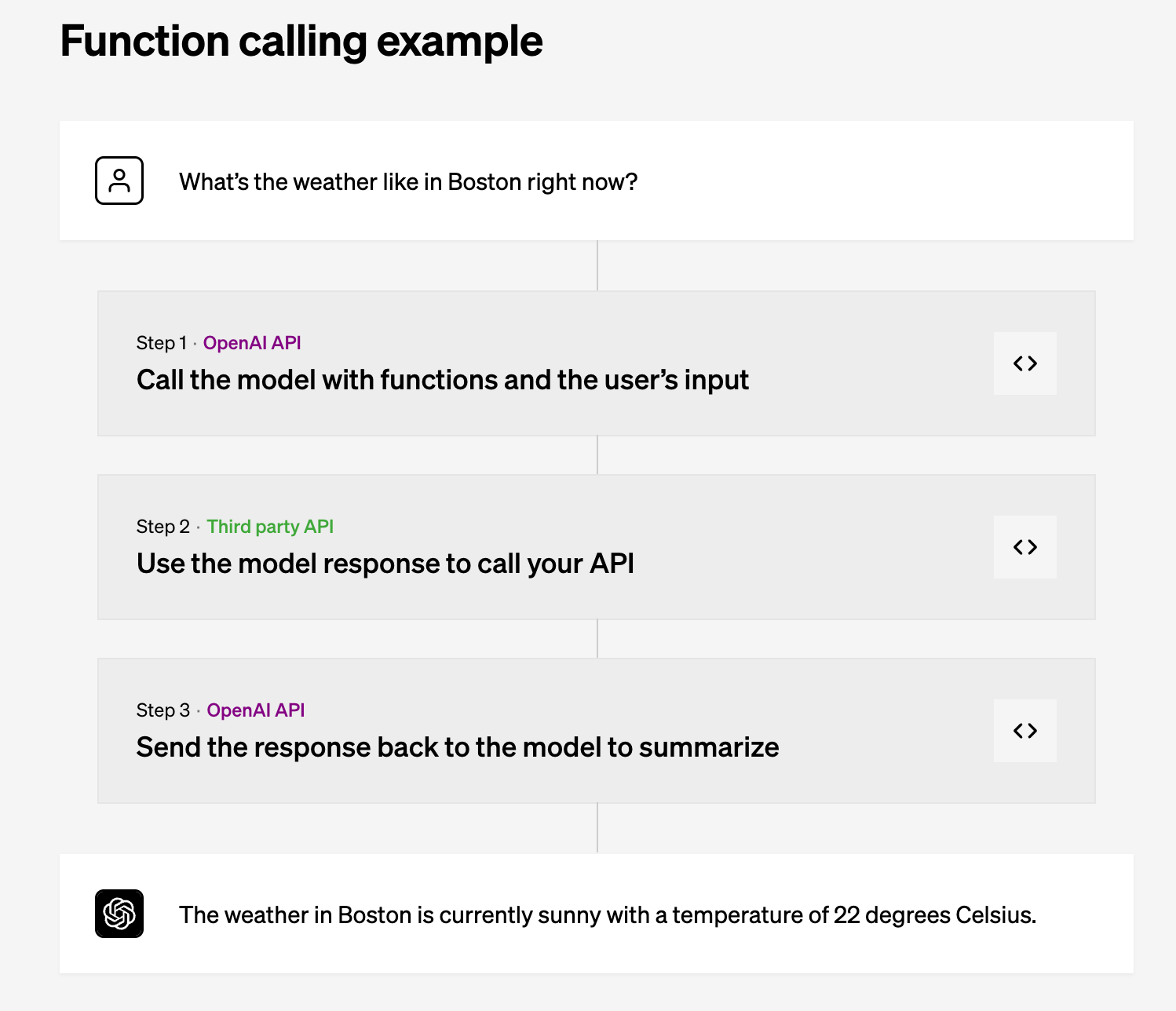

Here's a high-level overview of how function calling works:

- First, we call the `ChatCompletion` model with the user's query and a set of functions defined in the `functions` parameter.
- Based on the user's query, the model can then choose to call a function. If it does, the returned message contains a `function_call` whose `arguments` field is a stringified JSON object that adheres to the function's schema.
- We then parse the string into JSON and call our function with the arguments GPT-4 provides.
- Finally, we call the `ChatCompletion` model again, appending the function response as a new message, and GPT-4 summarizes the results back to the user.
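To make this flow concrete without an API key, here is a minimal offline sketch of the same loop. `fake_model` is a hypothetical stand-in for the `ChatCompletion` call: on the first turn it returns a hand-written assistant message in the shape described above (a `function_call` with a `name` and a stringified JSON `arguments` field), and once a `function` message is present it returns a plain text reply. The `FUNCTIONS` registry and `run_loop` helper are also assumptions for illustration, not part of the OpenAI library.

```python
import json


def get_current_weather(location, unit="fahrenheit"):
    """Dummy weather lookup that always returns the same forecast."""
    return json.dumps({"location": location, "temperature": "72", "unit": unit})


# Hypothetical registry: function names the model may emit -> Python callables
FUNCTIONS = {"get_current_weather": get_current_weather}


def fake_model(messages):
    """Hypothetical stand-in for openai.ChatCompletion.create (no network call)."""
    last = messages[-1]
    if last["role"] == "function":
        # Second call: turn the function result into a user-facing reply
        data = json.loads(last["content"])
        return {
            "role": "assistant",
            "content": f"It is {data['temperature']} degrees in {data['location']}.",
        }
    # First call: request a function call, with the arguments as a JSON string
    return {
        "role": "assistant",
        "content": None,
        "function_call": {
            "name": "get_current_weather",
            "arguments": json.dumps({"location": "Boston, MA"}),
        },
    }


def run_loop(user_query, model=fake_model):
    messages = [{"role": "user", "content": user_query}]
    # Send the query (a real request would also pass the function schemas)
    message = model(messages)
    if message.get("function_call"):
        # The model only names a function; our code parses the JSON and runs it
        name = message["function_call"]["name"]
        args = json.loads(message["function_call"]["arguments"])
        result = FUNCTIONS[name](**args)
        # Append the model's request and our result, then ask the model again
        messages.append(message)
        messages.append({"role": "function", "name": name, "content": result})
        message = model(messages)
    return message["content"]


print(run_loop("What's the weather like in Boston?"))
# It is 72 degrees in Boston, MA.
```

Swapping `fake_model` for a function that wraps the real API call would leave the rest of the loop unchanged, which is the point of the design: the model decides, your code executes.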

Alright, now that we know how function calling works, let's jump into the code.

Step 0: Installs & imports

For this example we'll work in Google Colab, so the first step is to install the OpenAI Python library, import `openai` and `json` into our notebook, and set our API key:

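```python
# This tutorial uses the pre-1.0 SDK interface (openai.ChatCompletion),
# which was removed in openai 1.0, so pin an older version
!pip install "openai<1.0"

import openai
import json

openai.api_key = "YOUR-API-KEY"
```

Step 1: Defining functions for the model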

Next, we define the functions the model has access to; in Step 2 we'll send them along with the user's query. In the example provided in OpenAI's documentation, they've simply hard-coded a dummy API call to get the weather.

- The model needs to be aware of the available functions it can call. These are described to the model with the `functions` parameter of the API call, as shown in Step 2.
- In this example, a dummy function `get_current_weather` has been defined, which always returns the same weather:

```python
# Example dummy function hard coded to return the same weather
# In production, this could be your backend API or an external API
def get_current_weather(location, unit="fahrenheit"):
    """Get the current weather in a given location"""
    weather_info = {
        "location": location,
        "temperature": "72",
        "unit": unit,
        "forecast": ["sunny", "windy"],
    }
    return json.dumps(weather_info)
```

Step 2: Send the user's query & functions to GPT

Now that we've defined this dummy function, we can proceed to make a GPT API call.

- This call should include the user query, "What's the weather like in Boston?"
- We also define the list of `functions` that the model can use, i.e. in this case just the dummy `get_current_weather` function. Here we include the function name, a description, and its parameters, described as a JSON Schema object.
- We've also set `function_call="auto"`, meaning the model decides, based on the user's query, whether to call a function and, if so, which one.
- If we want to force the model not to use any functions and just generate a user-facing message, we can set `function_call="none"`.
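Besides `"auto"` and `"none"`, the 0613 API also accepts an object such as `{"name": "get_current_weather"}` for `function_call`, which forces the model to call that specific function. The sketch below is a hypothetical helper, `build_request`, that only assembles the keyword arguments you would pass to `openai.ChatCompletion.create`; it makes no network call, and its name and `mode` argument are assumptions for illustration.

```python
def build_request(messages, functions, mode="auto"):
    """Assemble kwargs for openai.ChatCompletion.create (pre-1.0 SDK).

    mode: "auto", "none", or the name of one of `functions` to force.
    """
    if mode in ("auto", "none"):
        function_call = mode
    else:
        # Forcing a function: it must be one we actually described
        known = {f["name"] for f in functions}
        if mode not in known:
            raise ValueError(f"unknown function: {mode}")
        function_call = {"name": mode}
    return {
        "model": "gpt-4-0613",
        "messages": messages,
        "functions": functions,
        "function_call": function_call,
    }


weather_schema = {
    "name": "get_current_weather",
    "description": "Get the current weather in a given location",
    "parameters": {
        "type": "object",
        "properties": {"location": {"type": "string"}},
        "required": ["location"],
    },
}
msgs = [{"role": "user", "content": "What's the weather like in Boston?"}]
print(build_request(msgs, [weather_schema], mode="get_current_weather")["function_call"])
# {'name': 'get_current_weather'}
```

With a real key, the result could be unpacked into the call, e.g. `openai.ChatCompletion.create(**build_request(msgs, [weather_schema]))`.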

```python
# Step 2, send model the user query and what functions it has access to
def run_conversation():
    response = openai.ChatCompletion.create(
        model="gpt-4-0613",
        messages=[{"role": "user", "content": "What's the weather like in Boston?"}],
        functions=[
            {
                "name": "get_current_weather",
                "description": "Get the current weather in a given location",
                "parameters": {
                    "type": "object",
                    "properties": {
                        "location": {
                            "type": "string",
                            "description": "The city and state, e.g. San Francisco, CA",
                        },
                        "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
                    },
                    "required": ["location"],
                },
            }
        ],
        function_call="auto",
    )
    message = response["choices"][0]["message"]
```

Step 3: Parsing the Function Call Response

Next, we'll check whether the model has chosen to call a function.

- If it has, we parse the `arguments` string into JSON in our code and call our function with the provided arguments.

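```python
    # Step 3, check if the model wants to call a function
    if message.get("function_call"):
        function_name = message["function_call"]["name"]
        # The arguments arrive as a JSON string (the model may produce invalid JSON)
        function_args = json.loads(message["function_call"]["arguments"])

        # Call the dummy function with the arguments GPT-4 provided
        function_response = get_current_weather(
            location=function_args.get("location"),
            unit=function_args.get("unit", "fahrenheit"),
        )
```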
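Before moving on to Step 4, note that OpenAI's announcement cautions that the model may generate invalid JSON or hallucinate parameters, so it can be worth checking the arguments before calling anything. The sketch below is a hypothetical `parse_arguments` helper, not part of the OpenAI library: it decodes the `arguments` string and checks only the `required` list, keys missing from `properties`, and `enum` values. A full JSON Schema validator (for example the third-party `jsonschema` package) would be more thorough.

```python
import json


def parse_arguments(arguments, parameters):
    """Decode the model's stringified arguments and check them against a schema.

    Checks `required` keys, keys not declared in `properties` (possibly
    hallucinated), and `enum` values. Returns (args, errors); args is None
    when the string is not a JSON object.
    """
    try:
        args = json.loads(arguments)
    except json.JSONDecodeError as exc:
        return None, [f"invalid JSON: {exc.msg}"]
    if not isinstance(args, dict):
        return None, ["arguments must be a JSON object"]
    errors = []
    properties = parameters.get("properties", {})
    for key in parameters.get("required", []):
        if key not in args:
            errors.append(f"missing required argument: {key}")
    for key, value in args.items():
        if key not in properties:
            errors.append(f"unexpected argument: {key}")
        elif "enum" in properties[key] and value not in properties[key]["enum"]:
            errors.append(f"{key} must be one of {properties[key]['enum']}")
    return args, errors


weather_params = {
    "type": "object",
    "properties": {
        "location": {"type": "string"},
        "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
    },
    "required": ["location"],
}
print(parse_arguments('{"location": "Boston, MA", "unit": "kelvin"}', weather_params))
```

If `errors` is non-empty, one option is to skip the call and send the error text back to the model as the function message instead.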

Step 4: Feeding the Function Response Back to the Model

Next up, we call the GPT model again by appending the function response as a new message. This will allow the model to summarize the results back to the user:

```python
        # Step 4, send model the info on the function call and function response
        second_response = openai.ChatCompletion.create(
            model="gpt-4-0613",
            messages=[
                {"role": "user", "content": "What's the weather like in Boston?"},
                message,
                {
                    "role": "function",
                    "name": function_name,
                    "content": function_response,
                },
            ],
        )
        return second_response

    # If the model answered directly, return its first response
    return response

print(run_conversation())
```

Here we get the following response, in which GPT-4 summarizes the hard-coded function response:

```json
{
  "id": "chatcmpl-7S25z4Vx8lR8HcvmjlzzDoXDwNVpw",
  "object": "chat.completion",
  "created": 1686915595,
  "model": "gpt-4-0613",
  "choices": [
    {
      "index": 0,
      "message": {
        "role": "assistant",
        "content": "The weather in Boston is currently sunny and windy with a temperature of 72 degrees."
      },
      "finish_reason": "stop"
    }
  ],
  "usage": {
    "prompt_tokens": 73,
    "completion_tokens": 17,
    "total_tokens": 90
  }
}
```

As you can see, this approach creates a more structured way for generating model responses in JSON format and integrating external APIs or data into our ChatCompletion API calls.
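In practice you usually only need the assistant's text (and perhaps the token usage) from this object. A small sketch, working on the response above copied into a plain Python dict; the tutorial's own `response["choices"][0]["message"]` shows that the pre-1.0 SDK object supports the same dictionary-style indexing. The `extract_reply` helper is hypothetical.

```python
# The response printed above, copied into a plain dict
second_response = {
    "id": "chatcmpl-7S25z4Vx8lR8HcvmjlzzDoXDwNVpw",
    "object": "chat.completion",
    "created": 1686915595,
    "model": "gpt-4-0613",
    "choices": [
        {
            "index": 0,
            "message": {
                "role": "assistant",
                "content": "The weather in Boston is currently sunny and windy with a temperature of 72 degrees.",
            },
            "finish_reason": "stop",
        }
    ],
    "usage": {"prompt_tokens": 73, "completion_tokens": 17, "total_tokens": 90},
}


def extract_reply(response):
    """Return the first choice's text, or None if the model asked for a function call."""
    message = response["choices"][0]["message"]
    if message.get("function_call"):
        # The model wants another function call rather than answering the user
        return None
    return message["content"]


print(extract_reply(second_response))
print(second_response["usage"]["total_tokens"])  # 90
```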

Summary: GPT-4 Function Calling

Function calling is a major update to the GPT-4 and GPT-3.5 Turbo models, giving developers an essential component for calling external APIs: the model outputs a JSON object containing the arguments needed to call a function.

- Function calling solves a major limitation of GPT models, i.e., their inability to access external data sources and up-to-date information beyond the 2021 knowledge cutoff date.

- Users can make API calls entirely using natural language, and GPT-4 intelligently routes the request to the appropriate API function and interprets the results.

As mentioned, in my opinion this is a major step in LLM programming, as integrating GPT API calls with external APIs is now significantly easier. This opens up a wide range of possibilities, and it'll be interesting to see how the ever-growing Cambrian explosion of AI apps begins to integrate this functionality.