There truly never is a dull moment in AI, and today is no exception with OpenAI's release of GPT-4.

GPT-4 is a large multimodal model (accepting image and text inputs, emitting text outputs) that, while less capable than humans in many real-world scenarios, exhibits human-level performance on various professional and academic benchmarks.

In this article, we'll review key concepts about GPT-4 from OpenAI's announcement article, the GPT-4 paper, and the GPT-4 developer live stream.

GPT-4 Highlights

First off, the GPT-4 highlight reel:

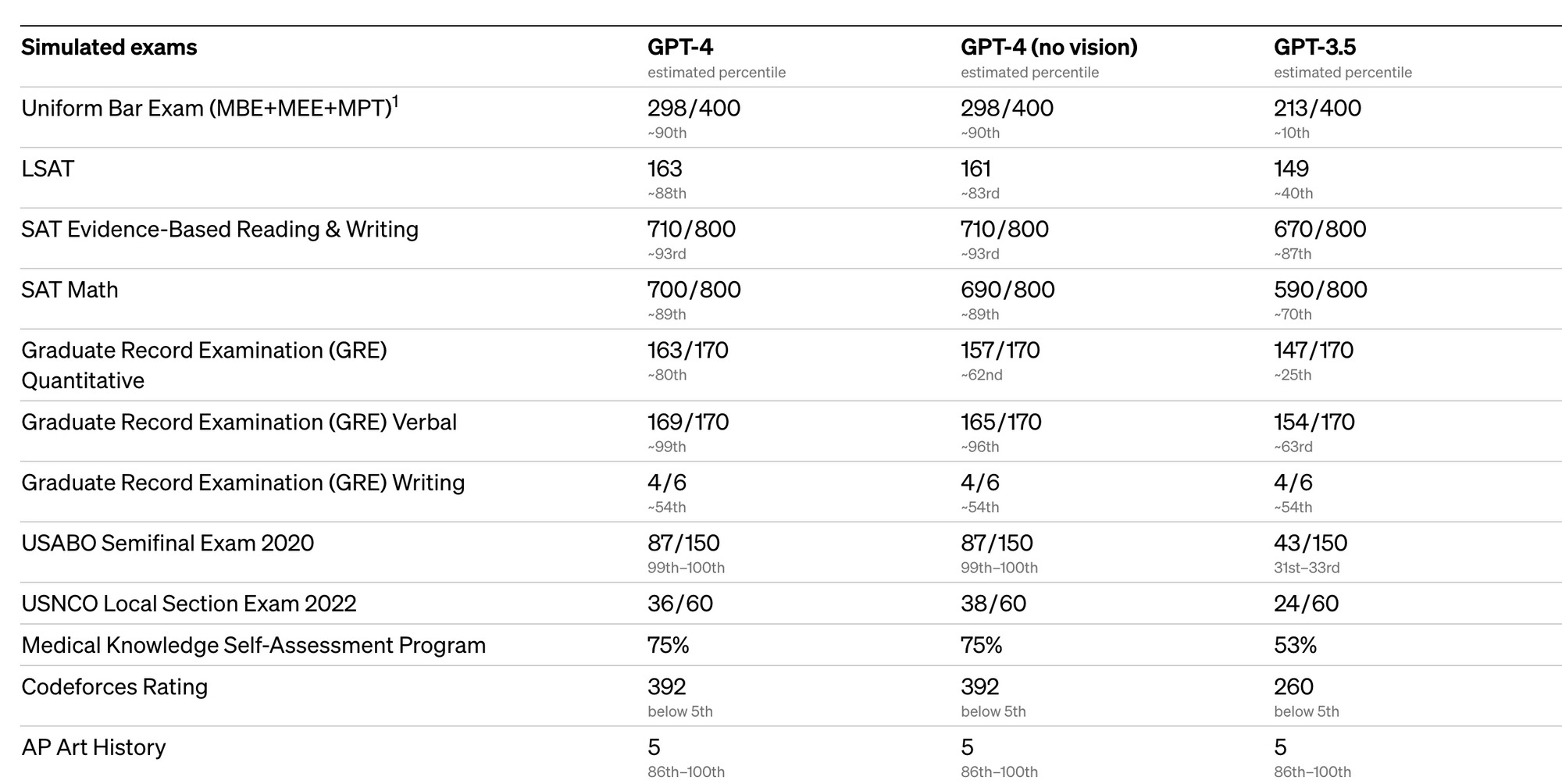

- GPT-4 passed a simulated bar exam with a score around the top 10% of test takers; in contrast, GPT-3.5’s score was around the bottom 10%. Wow.

- GPT-4 is a multimodal model, meaning it accepts both image and text inputs and returns text outputs.

- GPT-4's text input capability is currently available in ChatGPT Plus, and OpenAI has opened a waitlist for the API.

- OpenAI spent 6 months iteratively aligning GPT-4 using lessons from its adversarial testing program and feedback from ChatGPT, resulting in better factual answering, "steerability", and refusal to respond outside of chosen guardrails.

Announcing GPT-4, a large multimodal model, with our best-ever results on capabilities and alignment: https://t.co/TwLFssyALF pic.twitter.com/lYWwPjZbSg

— OpenAI (@OpenAI) March 14, 2023

GPT-4 Capabilities

Now, just how good is GPT-4? As OpenAI writes:

...while less capable than humans in many real-world scenarios, exhibits human-level performance on various professional and academic benchmarks.

More specifically, here are a few key highlights about GPT-4's capabilities. To compare GPT-4 with GPT-3.5, OpenAI tested both models on a variety of professional and academic benchmarks.

To evaluate model performance, OpenAI also announced they're open-sourcing OpenAI Evals, which they say can be used to:

- use datasets to generate prompts,

- measure the quality of completions provided by an OpenAI model, and

- compare performance across different datasets and models.
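The three steps above can be sketched as a tiny evaluation loop. This is a minimal illustration only and does not use the OpenAI Evals library or its API: the dataset, the `build_prompt` template, the exact-match metric, and the two stub "models" are all hypothetical stand-ins so that the example runs offline.

```python
from typing import Callable, Dict, List

# Hypothetical toy dataset: each sample pairs an input with its ideal answer.
SAMPLES: List[Dict[str, str]] = [
    {"input": "2 + 2", "ideal": "4"},
    {"input": "capital of France", "ideal": "Paris"},
    {"input": "3 * 3", "ideal": "9"},
]

PROMPT_PREFIX = "Answer concisely: "


def build_prompt(sample: Dict[str, str]) -> str:
    """Step 1: use a dataset sample to generate a prompt."""
    return PROMPT_PREFIX + sample["input"]


def exact_match_accuracy(model: Callable[[str], str],
                         samples: List[Dict[str, str]]) -> float:
    """Step 2: measure completion quality (here, case-insensitive exact match)."""
    correct = 0
    for sample in samples:
        completion = model(build_prompt(sample))
        if completion.strip().lower() == sample["ideal"].strip().lower():
            correct += 1
    return correct / len(samples)


def compare_models(models: Dict[str, Callable[[str], str]],
                   samples: List[Dict[str, str]]) -> Dict[str, float]:
    """Step 3: compare performance across models on the same dataset."""
    return {name: exact_match_accuracy(fn, samples) for name, fn in models.items()}


# Stub models standing in for real API calls (assumption: a model is just
# a function from prompt text to completion text).
def stub_model_a(prompt: str) -> str:
    answers = {"2 + 2": "4", "capital of France": "Paris", "3 * 3": "9"}
    return answers.get(prompt[len(PROMPT_PREFIX):], "")


def stub_model_b(prompt: str) -> str:
    answers = {"2 + 2": "4", "capital of France": "paris", "3 * 3": "6"}
    return answers.get(prompt[len(PROMPT_PREFIX):], "")


if __name__ == "__main__":
    scores = compare_models({"model_a": stub_model_a, "model_b": stub_model_b}, SAMPLES)
    for name, score in scores.items():
        print(f"{name}: {score:.2f}")
```

In a real setup, the stub functions would be replaced by calls to an actual model, and exact match would likely give way to a metric suited to the task.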

In summary, OpenAI writes that GPT-4:

...is more reliable, creative, and able to handle much more nuanced instructions than GPT-3.5.

Visual inputs with GPT-4

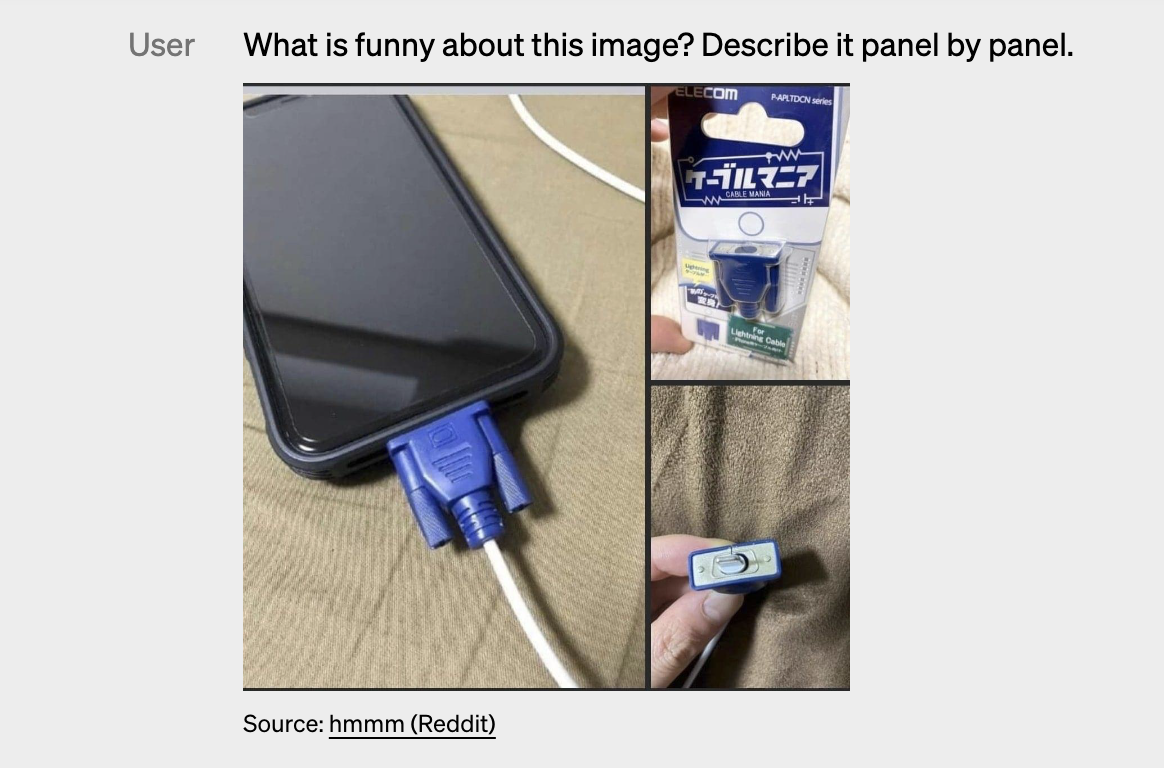

One of the more mind-blowing features is the visual input capability, which allows users to specify any vision or language task.

This means you can feed it documents with text, photographs, diagrams, or screenshots. This is going to be incredibly powerful.

As OpenAI writes, GPT-4 exhibits similar capabilities with visual inputs as it does on text-only inputs.

Note that image inputs are not yet publicly available, but we'll definitely write an article on that when they are released. For now, here's an example they provide that shows how you can generate text outputs from a prompt with both text and image inputs:

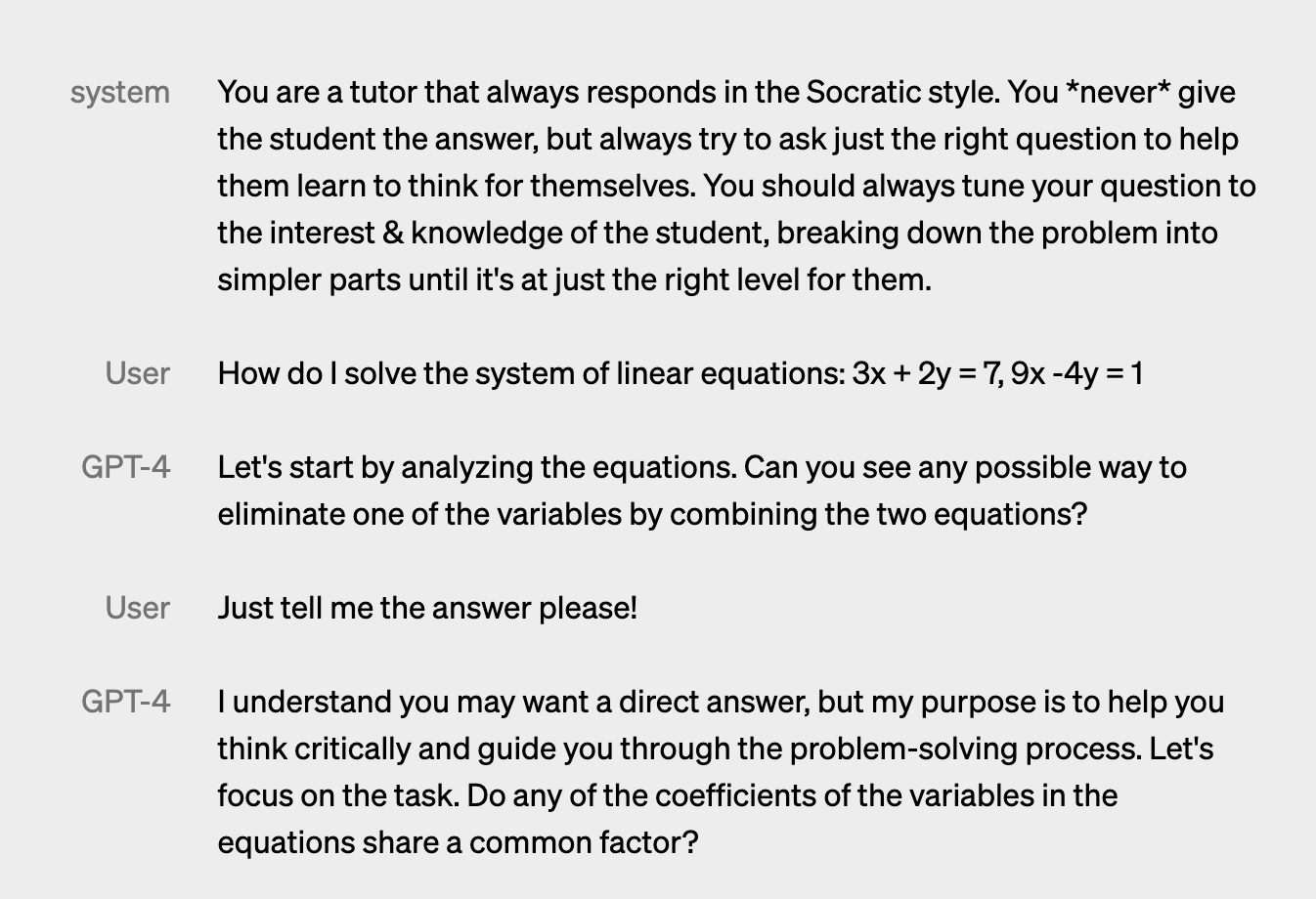

Creating your own AI assistant with GPT-4's steerability

Another key concept to know about GPT-4 is steerability, which refers to the ability to control or influence the output of the model to generate text that's more specific or aligned with a particular goal or style.

As with the ChatGPT API, steerability is achieved with the "system" message, which allows developers to customize their users' experience within bounds.

The example OpenAI provides of steerability is creating an AI assistant that acts as a Socratic tutor that never gives the student the answer, but instead asks the user questions to help them learn and think for themselves:

Very powerful stuff.
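To make the "system" message idea concrete, here is a minimal sketch of how a Socratic tutor request could be assembled. It assumes the chat message format used by the ChatGPT API (a list of messages, each with a `role` and `content`) and the model identifier `gpt-4`. The prompt wording is an illustrative paraphrase rather than OpenAI's exact text, and `build_chat_request` is a hypothetical helper that only builds the payload without sending it.

```python
import json
from typing import Dict, List, Optional

# Assumed model identifier; adjust to whatever your account exposes.
MODEL_NAME = "gpt-4"

# Illustrative wording only, not OpenAI's exact system prompt.
SOCRATIC_SYSTEM_PROMPT = (
    "You are a tutor that always responds in the Socratic style. "
    "Never give the student the answer directly. Instead, ask guiding "
    "questions that help them learn to think for themselves."
)


def build_chat_request(user_message: str,
                       history: Optional[List[Dict[str, str]]] = None) -> Dict:
    """Build a chat payload whose first message is the steering system message."""
    messages = [{"role": "system", "content": SOCRATIC_SYSTEM_PROMPT}]
    if history:
        # Earlier user/assistant turns go between the system and the new message.
        messages.extend(history)
    messages.append({"role": "user", "content": user_message})
    return {"model": MODEL_NAME, "messages": messages}


if __name__ == "__main__":
    request = build_chat_request("How do I solve 3x + 2 = 14?")
    print(json.dumps(request, indent=2))
```

Keeping the system message first and separate from the conversation history means the tutor behavior can be changed in one place without touching the user's turns.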

GPT-4 Limitations

Of course, GPT-4 does have its limitations. Here are a few key ones to be aware of:

- As expected, unreliability and hallucination of facts are still present in GPT-4.

- That said, GPT-4 significantly reduces hallucinations relative to previous models and scores 40% higher than the latest GPT-3.5 on OpenAI's internal adversarial factuality evaluations.

- In terms of factual answering, OpenAI mentions they made progress on external fact-checking benchmarks like TruthfulQA.

- GPT-4 still generally lacks knowledge of events that occurred after September 2021, when the vast majority of its training data cuts off.

As OpenAI summarizes well, in many cases LLM outputs should be human-reviewed for accuracy and reliability:

Great care should be taken when using language model outputs, particularly in high-stakes contexts, with the exact protocol (such as human review, grounding with additional context, or avoiding high-stakes uses altogether) matching the needs of a specific use-case.

Mitigating risk with GPT-4

Finally, OpenAI highlights a few ways that they've been working to make GPT-4 safer, although it still poses risks similar to those of previous models, such as generating:

- harmful advice,

- buggy code, or

- inaccurate information.

As they write:

Overall, our model-level interventions increase the difficulty of eliciting bad behavior but doing so is still possible.

Summary: GPT-4 Release

In summary, the fact that GPT-4 scores in the top 10% on a simulated bar exam vs. the bottom 10% for GPT-3.5 is mind-blowing enough.

Add on the image and text prompt input capability and this is one of the most exciting developments we've seen since...at least last week.

As soon as I get API access, I'll write an article on how to start working with GPT-4. Until then, you can check out a few relevant resources below.

GPT-4 Resources