In our previous articles, we discussed how LangChain is trying to be the missing piece for building more advanced LLM applications on top of base models like GPT-3:

...using these LLMs in isolation is often not enough to create a truly powerful app - the real power comes when you are able to combine them with other sources of computation or knowledge.

Specifically, in our previous guide on LangChain, we saw how we can use the library to work with external documents using their Document Loaders and OpenAI's Embeddings API to upload a PDF and be able to answer questions about the document.

In this guide, we'll expand on this and build a more complete research assistant that's trained on multiple documents. After we've computed the embeddings of multiple documents, we'll see how we can use Pinecone to store these vectors for use later. As highlighted on Pinecone's site:

The Pinecone vector database makes it easy to build high-performance vector search applications.

If you're unfamiliar with vector databases, you can check out Pinecone's guide on the topic here, which says:

Many organizations would benefit from storing and analyzing complex data, but complex data can be difficult for traditional databases built with structured data in mind.

Vector databases are purpose-built to handle the unique structure of vector embeddings. They index vectors for easy search and retrieval by comparing values and finding those that are most similar to one another.

By combining OpenAI's Embeddings & Completions API, LangChain, and Pinecone, we'll see how we can get one step closer to building an end-to-end GPT-3 enabled research assistant.

For this project, I'll be building a research assistant for two sub-fields of machine learning I'm particularly interested in: prompt engineering and reinforcement learning.

Specifically, I've retrieved 50+ academic papers on these subjects that I'll be computing the embeddings for, and then will store these vector embeddings at Pinecone so I can ask questions about these topics at any time.

The next step will be to connect these Pinecone embeddings to an app that will serve as a research assistant, but for now, let's see how we can use LangChain to compute the embeddings for multiple documents.

The steps we need to take include:

- Use LangChain to upload and preprocess multiple documents

- Compute the embeddings with LangChain's

OpenAIEmbeddingswrapper - Index and store the vector embeddings at PineCone

- Using GPT-3 and LangChain's

question_answeringto query these documents

1. Installs & imports

First off, we need to install the following lirares and system dependencies into our Colab notebook:

langchainopenaiunstructuredpython-magicchromadbpinecone-clientdetectron2layoutparserlayoutmodelstesseractPillow

Next, we can import the necessary libraries into our notebook and set our OpenAI API key as well as our Pinecone API key as follows:

import os

import openai

import pinecone

import langchain

from langchain.embeddings import OpenAIEmbeddings

from langchain.vectorstores import Chroma

from langchain import OpenAI, VectorDBQA

from langchain.document_loaders import DirectoryLoader

from langchain.document_loaders import UnstructuredFileLoader

from langchain.text_splitter import CharacterTextSplitter

from langchain.vectorstores import Chroma, Pinecone

from langchain.embeddings.openai import OpenAIEmbeddings

import magic

import nltk

nltk.download('punkt')

PINECONE_API_KEY = 'YOUR-API-KEY'

PINECONE_API_ENV = 'YOUR-API-ENV'

os.environ["OPENAI_API_KEY"] = "YOUR-API-KEY"2. Loading documents and splitting text

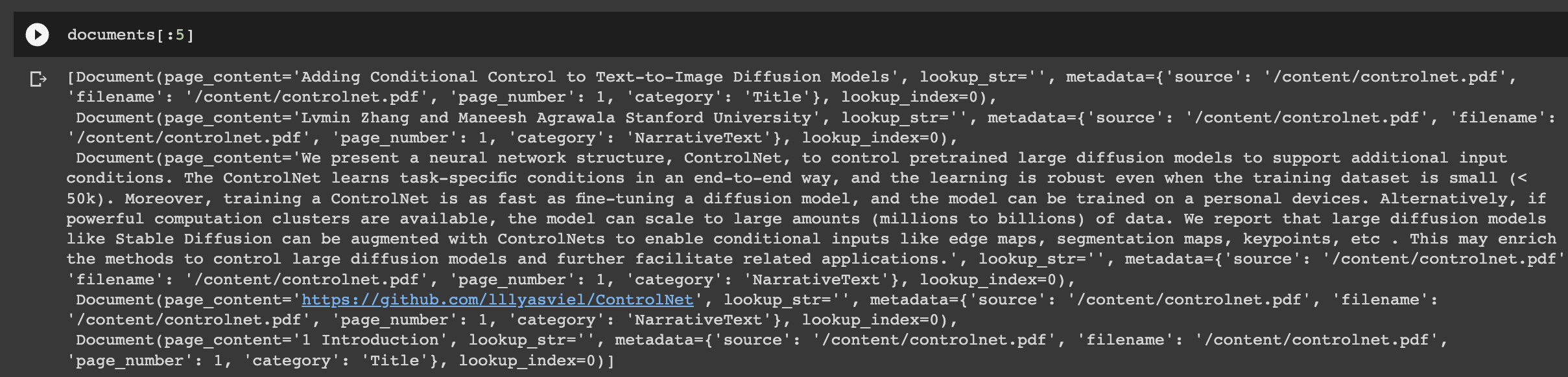

Next, I'm going to mount my Google Drive to my Colab instance. In this case, I've stored the 50+ academic papers in a folder in my drive and I can use LangChain's Directory Loader in order to load all documents in a directory.

Under the hood, DirectoryLoader uses the Unstrcured File Loader which works with PDFs, text files, powerpoints, HTML, images, and other file types. Note that using LangChain to load these 50+ papers from my directory does take a few hours:

from google.colab import drive

drive.mount('/content/drive')

loader = DirectoryLoader('PATH-TO-DRIVE-FOLDER/', glob='**/*.pdf')

documents = loader.load()

documents[:5]

Next, we need to split our documents into smaller subsections so that we can retrieve relevant context from a paper and add it to our prompt without running into token limits.

To do this, we can use the CharacterTextSplitter class from the langchain.text_splitter module. In this case, we'll split each section into a chunk_size of 1000 characters:

text_splitter = CharacterTextSplitter(chunk_size=1000, chunk_overlap=0)

texts = text_splitter.split_documents(documents)3. Getting embeddings for semantic search

Now that we've got our documents uploaded and preprocessed into the right format, we can go and use the OpenAIEmbeddings class from the langchain.embeddings.openai module to compute the embeddings for each subsection of text:

embeddings = OpenAIEmbeddings(openai_api_key=os.environ['OPENAI_API_KEY'])4. Storing the vector embeddings at Pinecone

After computing the embeddings for the 50+ papers, we want to store them so that we don't need to recompute them each time, which is where Pinecone comes in.

Here's an overview of how this works:

- First, we can initialize Pinecone with the

pinecone.initcommand and ourPINECONE_API_KEYandPINECONE_API_ENVvariables. - The

Pinecone.from_textsmethod is then used to create aPineconeinstance from the text content of the chunks and the embeddings, with theindex_nameparameter set to"mlqai". - The resulting

docsearchobject is then used for semantic search to retrieve relevant document sections to the user's question

# initialize pinecone

pinecone.init(

api_key=PINECONE_API_KEY, # find at app.pinecone.io

environment=PINECONE_API_ENV

)

index_name = "mlqai"

docsearch = Pinecone.from_texts([t.page_content for t in texts], embeddings, index_name=index_name)

5. Querying documents

Now that we've got our vector embeddings indexed and stored at Pinecone we can use LangChain once again to query our documents using the OpenAI and load_qa_chain classes from the langchain.llms and langchain.chains.question_answering modules to create a question-answering assistant.

In this case, we'll set the LLM to OpenAI with a llm object is created with temperature=0 since we want more factual, predictable answers.

Here's an overview of how this works:

- We'll also use the

load_qa_chainmethod to load a pre-trained question-answering model from OpenAI. - The

chainobject is created with our OpenAIllmand thechain_typeparameter is set to"stuff". - We then use the

docsearch.similarity_searchto find the most similar documents to the user's query - In this case, I've asked it to "Tell me about Asynchronous Methods for Deep Reinforcement Learning", which is one of the papers that I trained it on

- Finally, we just use

chain.runto run the loaded question-answering model on the documents returned from the semantic search

from langchain.llms import OpenAI

from langchain.chains.question_answering import load_qa_chain

llm = OpenAI(temperature=0, openai_api_key=os.environ['OPENAI_API_KEY'])

chain = load_qa_chain(llm, chain_type="stuff")

query = "Tell me about Asynchronous Methods for Deep Reinforcement Learning"

docs = docsearch.similarity_search(query, include_metadata=True)

chain.run(input_documents=docs, question=query)

Here's the response:

Asynchronous Methods for Deep Reinforcement Learning is a conceptually simple and lightweight framework for deep reinforcement learning that uses asynchronous gradient descent for optimization of deep neural network controllers. It presents asynchronous variants of four standard reinforcement learning algorithms and shows that parallel actor-learners have a stabilizing effect on training allowing all four methods to successfully train neural network controllers. The best performing method, an asynchronous variant of actor-critic, surpasses the current state-of-the-art on the Atari domain while training for half the time on a single multi-core CPU instead of a GPU. Furthermore, it has been shown to succeed on a wide variety of continuous motor control problems as well as on a new task of navigating random 3D mazes using a visual input.

Not bad. We can see that's directly pulled from the abstract and introduction of the paper, so everything looks to be working well.

Summary: Building a GPT-3 Enabled Research Assistant

In this guide, we saw how we can combine OpenAI, GPT-3, and LangChain for document processing, semantic search, and question-answering.

We also saw how we can the cloud-based vector database Pinecone to index and semantically similar documents. In particular, my goal was to build a research assistant for two subfields of machine learning that I'm particularly interested in: prompt engineering and reinforcement learning.

In this next article, we'll see how we can put all this together and build out a front-end app using Streamlit for our GPT-3 enabled research assistant.