There's no question that large language models (LLMs) have taken the world by storm in recent months, and their prevalence in apps is undoubtedly going to increase in the coming years.

As you start working with LLMs like GPT-3 and the ChatGPT API, however, you'll notice several limitations when it comes to building more complex LLM applications.

While the base models are of course incredibly impressive in their own right, there are a few missing pieces when it comes to building end-to-end apps, such as:

- Making it easy to work with external documents or databases

- Keeping track of short and long-term chat memory

- Building out multi-step workflows to search the internet, interact with other external tools, and so on

This is where LangChain comes in: it aims to solve these challenges and enable developers to build more complex LLM-enabled applications.

As the LangChain documentation highlights:

Large language models (LLMs) are emerging as a transformative technology, enabling developers to build applications that they previously could not. But using these LLMs in isolation is often not enough to create a truly powerful app - the real power comes when you are able to combine them with other sources of computation or knowledge.

More specifically, LangChain provides a variety of modules that drastically increase the power of what you can accomplish with LLMs, such as:

- Prompts: Including prompt templates, management, optimization, and serialization.

- Document loaders: Making it much easier to load and feed our LLM unstructured data from a variety of sources and formats.

- Chains: Chains allow you to combine multiple LLM calls in sequence, enabling more complex workflows.

- Agents: Agents enable LLMs to make observations and decisions on which actions to take to accomplish a particular task.

- Memory: LangChain provides a standard interface for maintaining short and long-term memory of previous interactions.

There's a lot to cover for each of the modules in the LangChain library, although as we'll see, the true power of LangChain comes when you combine multiple modules interacting with one another.

In this guide, let's get familiar with a few more key concepts and walk through LangChain's Quickstart guide to see how we can get started with the library. In particular, we'll discuss:

- LLMs: the basic building block of LangChain

- Prompt templates

- Chains: Combining LLMs and prompts with a multi-step workflow

- Agents: Dynamically call chains based on user input

- Memory: Adding state to chains and agents

Let's get started.


LLMs: the basic building block of LangChain

First things first: if you're working in Google Colab, we need to !pip install langchain openai and then set our OpenAI API key:

import langchain

import openai

import os

os.environ["OPENAI_API_KEY"] = "YOUR-API-KEY"

Next, let's check out the most basic building block of LangChain: LLMs.

Large Language Models (LLMs) are a core component of LangChain. LangChain is not a provider of LLMs, but rather provides a standard interface through which you can interact with a variety of LLMs.
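To make the idea of a "standard interface" concrete, here is a minimal plain-Python sketch. It is not LangChain's actual class hierarchy: the LLM protocol, EchoLLM, CannedLLM, and ask() are hypothetical stand-ins that make no API calls. The point is only that application code written against one call signature (prompt in, text out) doesn't need to know which model sits behind it.

```python
from typing import Protocol


class LLM(Protocol):
    """Hypothetical minimal interface: prompt string in, completion string out."""

    def __call__(self, prompt: str) -> str:
        ...


class EchoLLM:
    """Toy stand-in model that upper-cases the prompt (no network calls)."""

    def __call__(self, prompt: str) -> str:
        return prompt.upper()


class CannedLLM:
    """Toy stand-in model that always returns the same response."""

    def __init__(self, response: str) -> None:
        self.response = response

    def __call__(self, prompt: str) -> str:
        return self.response


def ask(llm: LLM, question: str) -> str:
    # Application code depends only on the shared call signature,
    # so any object that satisfies LLM can be swapped in.
    return llm(question)


print(ask(EchoLLM(), "hello"))  # HELLO
print(ask(CannedLLM("OptimAIzr"), "What is a good name for an AI?"))  # OptimAIzr
```

Swapping EchoLLM for a wrapper around a real provider would leave ask() unchanged, which is roughly the motivation behind LangChain exposing many providers through one interface.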

For this example, let's stick with GPT-3 and import OpenAI as follows:

from langchain.llms import OpenAI

Next, we can do a simple API call for some text input as follows:

llm = OpenAI(temperature=0.7)

text = "What is a good name for an AI that helps you fine tune LLMs."

print(llm(text))

The result: "OptimAIzr"...not bad...I guess.

Prompt templates

OK, simple enough. Next, let's build on this with a prompt template building block.

Language models take text as input - that text is commonly referred to as a prompt. Typically this is not simply a hardcoded string but rather a combination of a template, some examples, and user input. LangChain provides several classes and functions to make constructing and working with prompts easy.
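As a rough illustration of "a template, some examples, and user input", here is a hypothetical build_prompt helper in plain Python (it is not part of LangChain). The example product/name pairs are made up purely for illustration.

```python
from typing import List, Tuple


def build_prompt(instructions: str, examples: List[Tuple[str, str]], user_input: str) -> str:
    """Hypothetical helper: combine instructions, worked examples, and user input."""
    lines = [instructions, ""]
    for product, name in examples:
        # Each example shows the model the input/output pattern we want.
        lines += [f"Product: {product}", f"Name: {name}", ""]
    # The last entry leaves "Name:" open for the model to complete.
    lines += [f"Product: {user_input}", "Name:"]
    return "\n".join(lines)


prompt = build_prompt(
    "Suggest a company name for each product.",
    [("colorful socks", "Rainbow Threads"), ("smart thermostats", "ThermoWise")],
    "AI robots",
)
print(prompt)
```

Only the final "Product:" line changes from request to request, which is exactly the part a prompt template turns into a variable.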

Instead of hardcoding the product for our simple name generator, we can initialize a PromptTemplate and define the input_variables and template as follows:

from langchain.prompts import PromptTemplate

prompt = PromptTemplate(
    input_variables=["product"],
    template="What is a good name for a company that makes {product}?",
)

print(prompt.format(product="AI robots"))

Here we can see our full prompt is now "What is a good name for a company that makes AI robots?"
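Conceptually, a prompt template is a string with named placeholders plus a declared list of input variables. The sketch below is a hypothetical, simplified MiniPromptTemplate (not LangChain's implementation) that uses Python's string.Formatter to check that the declared input_variables match the placeholders in the template, then fills them in with str.format.

```python
from string import Formatter
from typing import List


class MiniPromptTemplate:
    """Hypothetical, simplified prompt template (not LangChain's PromptTemplate)."""

    def __init__(self, input_variables: List[str], template: str) -> None:
        # Formatter().parse yields (literal_text, field_name, format_spec, conversion);
        # field_name is None for trailing literal text, so filter those out.
        placeholders = {field for _, field, _, _ in Formatter().parse(template) if field}
        if placeholders != set(input_variables):
            raise ValueError(
                f"template uses {sorted(placeholders)} but input_variables is {sorted(input_variables)}"
            )
        self.input_variables = input_variables
        self.template = template

    def format(self, **kwargs: str) -> str:
        # Fail loudly if a declared variable was not supplied.
        missing = set(self.input_variables) - set(kwargs)
        if missing:
            raise KeyError(f"missing input variables: {sorted(missing)}")
        return self.template.format(**kwargs)


prompt = MiniPromptTemplate(
    input_variables=["product"],
    template="What is a good name for a company that makes {product}?",
)
print(prompt.format(product="AI robots"))
# What is a good name for a company that makes AI robots?
```

Validating at construction time catches a typo in a placeholder name before any model call is made.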

Chains: Combining LLMs and prompts with a multi-step workflow

Now let's combine the previous two building blocks with a chain:

Using an LLM in isolation is fine for some simple applications, but many more complex ones require chaining LLMs - either with each other or with other experts.

A chain can be made up of multiple links, which can either be primitives (i.e. an LLM) or other chains.

The most basic type of chain we can use is an LLMChain, which includes a PromptTemplate and an LLM.

In this case, we can chain together our previous template and LLM as follows:

from langchain.chains import LLMChain

llm = OpenAI(temperature=0.7)

prompt = PromptTemplate(
    input_variables=["product"],
    template="What is a good name for a company that makes {product}?",
)

chain = LLMChain(llm=llm, prompt=prompt)

chain.run("AI robots")

"RoboMindTech"...nice.
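To make the idea of chains built from "links" more concrete, here's a hypothetical plain-Python sketch of a single prompt-plus-LLM chain and a sequential chain that feeds each link's output into the next. MiniLLMChain, MiniSequentialChain, and fake_llm are illustrative names, not LangChain classes; fake_llm simply wraps its prompt in brackets so the data flow is visible without an API key.

```python
from typing import Callable, List

# Hypothetical type: any function from prompt text to completion text.
LLMFn = Callable[[str], str]


class MiniLLMChain:
    """Hypothetical sketch: one prompt template plus one LLM call."""

    def __init__(self, llm: LLMFn, template: str) -> None:
        self.llm = llm
        self.template = template

    def run(self, text: str) -> str:
        # Fill the template's single {input} slot, then call the model once.
        return self.llm(self.template.format(input=text))


class MiniSequentialChain:
    """Hypothetical sketch: each link's output becomes the next link's input."""

    def __init__(self, links: List[MiniLLMChain]) -> None:
        self.links = links

    def run(self, text: str) -> str:
        for link in self.links:
            text = link.run(text)
        return text


def fake_llm(prompt: str) -> str:
    # Deterministic stand-in for a model: wraps the prompt in brackets.
    return f"[{prompt}]"


name_chain = MiniLLMChain(fake_llm, "Name a company that makes {input}.")
slogan_chain = MiniLLMChain(fake_llm, "Write a slogan for {input}.")
pipeline = MiniSequentialChain([name_chain, slogan_chain])
print(pipeline.run("AI robots"))
# [Write a slogan for [Name a company that makes AI robots.].]
```

Because a sequential chain exposes the same run() shape as a single link, chains can themselves be used as links, which is the composability the LangChain docs describe.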

OK, now that we've put together two simple building blocks, let's check out one of the most powerful features of LangChain: agents.

Agents: Dynamically call chains based on user input

Up until now, our chains have run in a simple, predetermined order: user input -> prompt template -> LLM API call -> result.

Agents, on the other hand, use an LLM to determine which actions to take and in what order. These actions can include using an external tool, observing the result, or returning it to the user.

Agents are systems that use a language model to interact with other tools. These can be used to do more grounded question/answering, interact with APIs, or even take actions.

The potential for building personal assistants with agents seems nearly limitless. As LangChain highlights:

These agents can be used to power the next generation of personal assistants - systems that intelligently understand what you mean, and then can take actions to help you accomplish your goal.

To make use of LangChain agents, there are a few key concepts to understand:

- Tool: A function that performs a specific duty, such as a Google search, a database lookup, a Python REPL, or another chain.

- Agent: The agent to use, which is a string that references a supported agent class. There are a number of LangChain-supported agents, and you can also build your own custom agents.

You can find a full list of predefined tools here and a full list of supported agents here.
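To show how tools and an agent fit together, here's a simplified sketch of a ReAct-style loop, loosely modeled on the Thought / Action / Action Input / Observation / Final Answer format used by the zero-shot-react-description agent. Tool, run_agent, scripted_llm, and the toy lookup and divide tools are hypothetical, not LangChain's API. The "LLM" here is a scripted list of responses, so unlike a real model it does not actually read the observations.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List


@dataclass
class Tool:
    """Hypothetical tool record: the description is what the model reads."""
    name: str
    description: str
    func: Callable[[str], str]


# Matches "Action: <tool>" followed on the next line by "Action Input: <text>".
ACTION_RE = re.compile(r"Action: (.+)\nAction Input: (.+)")


def run_agent(llm: Callable[[str], str], tools: List[Tool], question: str, max_steps: int = 5) -> str:
    by_name: Dict[str, Tool] = {t.name: t for t in tools}
    tool_list = "\n".join(f"{t.name}: {t.description}" for t in tools)
    scratchpad = f"Tools:\n{tool_list}\nQuestion: {question}\n"
    for _ in range(max_steps):
        step = llm(scratchpad)  # the model proposes the next thought/action
        scratchpad += step + "\n"
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip()
        match = ACTION_RE.search(step)
        if match is None:
            raise ValueError(f"could not parse model output: {step!r}")
        tool = by_name[match.group(1).strip()]
        observation = tool.func(match.group(2).strip())
        scratchpad += f"Observation: {observation}\n"  # fed back on the next step
    raise RuntimeError("agent did not reach a final answer within max_steps")


def scripted_llm(responses: Iterable[str]) -> Callable[[str], str]:
    # Stand-in for a real model: returns pre-written steps in order, ignoring the prompt.
    it = iter(responses)
    return lambda prompt: next(it)


def divide(expr: str) -> str:
    # Toy calculator that only handles "a / b".
    a, b = (float(x) for x in expr.split("/"))
    return f"{a / b:.2f}"


facts = {"apples in basket": "12", "people": "4"}
tools = [
    Tool("lookup", "Looks up a fact in a small in-memory table.", lambda key: facts.get(key, "not found")),
    Tool("divide", "Divides two numbers written as 'a / b'.", divide),
]
llm = scripted_llm([
    "Thought: I need the number of apples.\nAction: lookup\nAction Input: apples in basket",
    "Thought: I need the number of people.\nAction: lookup\nAction Input: people",
    "Thought: Now I can divide.\nAction: divide\nAction Input: 12 / 4",
    "Thought: I now know the final answer.\nFinal Answer: 3.00 apples each",
])
print(run_agent(llm, tools, "How many apples does each person get?"))
# 3.00 apples each
```

In a real agent, the model sees each Observation in the growing prompt and chooses the next action itself. The part this sketch is meant to illustrate is the loop: parse an action, call the matching tool, append the result, and repeat until a final answer appears.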

To better understand this, let's look at two things that we know ChatGPT can't do: search the internet for anything after 2021, and (as of today) perform more complex mathematical operations.

First, we'll need to !pip install google-search-results and set os.environ["SERPAPI_API_KEY"] = "YOUR-API-KEY" using a SerpApi key, which you can get here.

Next, we can load in the serpapi and llm-math tools and initialize our agent with zero-shot-react-description as follows.

In this case, I'll ask for Tesla's market cap as of March 3rd, 2023, and how many times bigger it is than Ford's market cap:

from langchain.agents import load_tools

from langchain.agents import initialize_agent

from langchain.llms import OpenAI

# First, let's load the language model we're going to use to control the agent.

llm = OpenAI(temperature=0)

# Next, let's load some tools to use. Note that the `llm-math` tool uses an LLM, so we need to pass that in.

tools = load_tools(["serpapi", "llm-math"], llm=llm)

# Finally, let's initialize an agent with the tools, the language model, and the type of agent we want to use.

agent = initialize_agent(tools, llm, agent="zero-shot-react-description", verbose=True)

# Now let's test it out!

agent.run("What is Tesla's market capitalization as of March 3rd 2023, how many times bigger is this than Ford's market cap on the same day?")

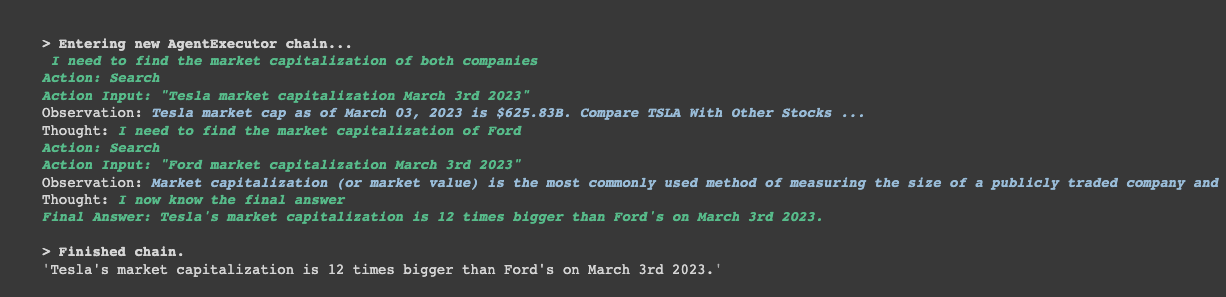

We can see the agent:

- Enters a new AgentExecutor chain and states "I need to find the market capitalization of both companies"

- Then runs the following search: "Tesla market capitalization March 3rd 2023"

- Observes that on March 03, 2023, the Tesla market cap is $625.83B

- Inputs a search for Ford's market cap on March 3rd 2023 and observes $52.14B

- The agent then has a "thought" that it knows the final answer...

Tesla's market capitalization is 12 times bigger than Ford's on March 3rd 2023.

Very. cool.

Of course, since the agent is simply performing Google searches, incorrect search results could lead to incorrect answers, so as always, these LLM responses should be double-checked.
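For example, the arithmetic step is easy to verify by hand. Using the two market caps the agent observed in its trace (these come from its search results, not from an independent source), a quick division confirms the "12 times" figure:

```python
# Market caps the agent observed for March 3rd 2023 (USD billions), as shown in its trace.
tesla_cap_b = 625.83
ford_cap_b = 52.14

ratio = tesla_cap_b / ford_cap_b
print(f"Tesla's market cap is {ratio:.2f}x Ford's")  # Tesla's market cap is 12.00x Ford's
```

This only checks the division; whether the search results themselves were accurate still needs to be confirmed against a primary source.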

Memory: Adding state to chains and agents

Lastly, memory is another interesting feature of LangChain, which provides state to our chains and agents.

The clearest and simplest example of this is a chatbot: you want it to remember previous messages so it can use that context to have a better conversation.

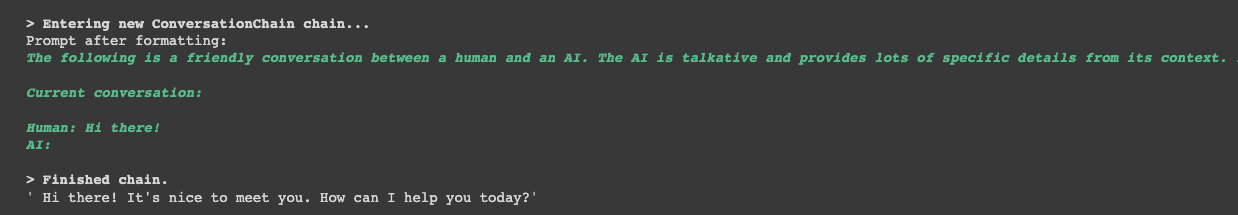

To add memory to LangChain, we can simply import ConversationChain and use it as follows:

from langchain import ConversationChain

llm = OpenAI(temperature=0)

conversation = ConversationChain(llm=llm, verbose=True)

conversation.predict(input="Hi there!")

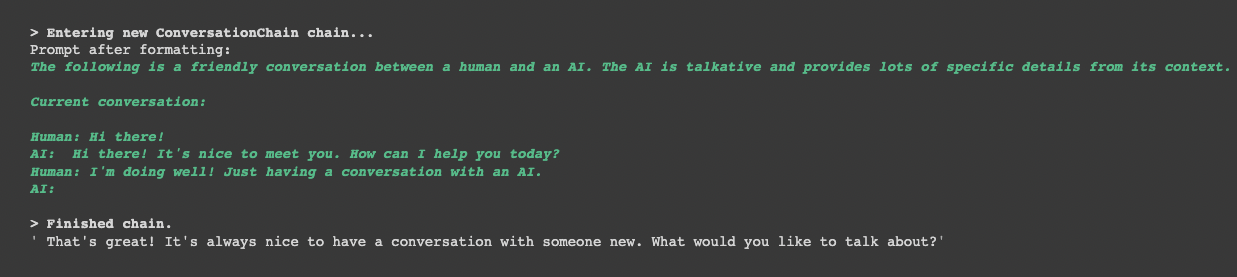

We can continue the conversation and see it has memory of all the previous interactions:

conversation.predict(input="I'm doing well! Just having a conversation with an AI.")
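Under the hood, the simplest form of memory just keeps the transcript and includes it in each new prompt. The sketch below is a hypothetical, plain-Python illustration of that buffer-style approach: BufferMemory, MiniConversation, and counting_llm are made up for this example, and LangChain's own ConversationChain uses its own prompt wording and memory classes.

```python
from typing import Callable, List, Tuple


class BufferMemory:
    """Hypothetical buffer-style memory: keeps every (human, ai) turn verbatim."""

    def __init__(self) -> None:
        self.turns: List[Tuple[str, str]] = []

    def history(self) -> str:
        return "\n".join(f"Human: {h}\nAI: {a}" for h, a in self.turns)

    def save(self, human: str, ai: str) -> None:
        self.turns.append((human, ai))


class MiniConversation:
    """Hypothetical sketch of a conversation chain that replays memory into each prompt."""

    def __init__(self, llm: Callable[[str], str]) -> None:
        self.llm = llm
        self.memory = BufferMemory()

    def predict(self, user_input: str) -> str:
        history = self.memory.history()
        # Prepend the full transcript so the model can "remember" earlier turns.
        prompt = (history + "\n" if history else "") + f"Human: {user_input}\nAI:"
        reply = self.llm(prompt)
        self.memory.save(user_input, reply)
        return reply


def counting_llm(prompt: str) -> str:
    # Toy model that reports how many human messages it can see in its prompt.
    return f"I can see {prompt.count('Human:')} message(s)."


conversation = MiniConversation(counting_llm)
print(conversation.predict("Hi there!"))  # I can see 1 message(s).
print(conversation.predict("I'm doing well! Just having a conversation with an AI."))  # I can see 2 message(s).
```

The trade-off of a plain buffer is that the prompt grows with every turn, which is why LangChain also offers other memory types, such as ones that summarize older turns.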

Summary: Getting started with LangChain

As we've seen, LangChain provides multiple building blocks for building out more complex LLM applications that involve multi-step workflows, third party tools, and memory.

There's a lot more to cover on each of these topics, but the concept of agents alone has my mind spinning with potential applications...it will be exciting to see which ones take shape.

My first thought is that you can build a pretty powerful research assistant by combining these tools, for example:

- Loading and parsing unstructured data from multiple sources

- Combining prompts and chains to perform a multi-step research workflow

- Using agents and third-party tools to augment existing data with external resources

- Finally, improving the results by providing feedback in the form of chat memory

In the next few articles, we'll walk through how we can bring this LangChain-powered research assistant to life...for now, I hope you're as excited as I am about the potential of this library and building more complex LLM-enabled applications.