While large language models like GPT-4 have propelled us into a new era of artificial intelligence, many say autonomous AI agents are the next big thing.

There have already been several impressive AI agent frameworks, such as AutoGPT and BabyAGI, although these weren't always reliable: they would often get stuck in infinite loops, struggle with task prioritization, and so on.

That said, Microsoft just entered the AI agent space with the most impressive open source framework I've seen to date: AutoGen.

In this guide, we'll look at what you can do with AutoGen, including key concepts, use cases, and how to get started with the framework.

What is AutoGen?

As the authors write:

AutoGen is a framework that enables development of LLM applications using multiple agents that can converse with each other to solve tasks.

AutoGen agents are customizable, conversable, and seamlessly allow human participation.

They can operate in various modes that employ combinations of LLMs, human inputs, and tools.

As highlighted in the AutoGen paper, a few key advantages of the design include:

- It is able to navigate the strong (yet imperfect) generation and reasoning capabilities of LLMs

- It leverages human input to augment LLMs, while still providing automation through this multi-agent conversation framework

- It simplifies the implementation of complex LLM workflows as automated agent chats

- It provides a replacement for openai.Completion or openai.ChatCompletion as an enhanced inference API.

Alright, with that high-level overview, let's jump into a few code examples to see what this all means in practice.

Getting Started with AutoGen

First off, let's create a new notebook and install AutoGen as follows:

```shell
pip install pyautogen
```

Since we'll also be using GPT-4 under the hood, we'll need to pip install openai and set our OpenAI API key.

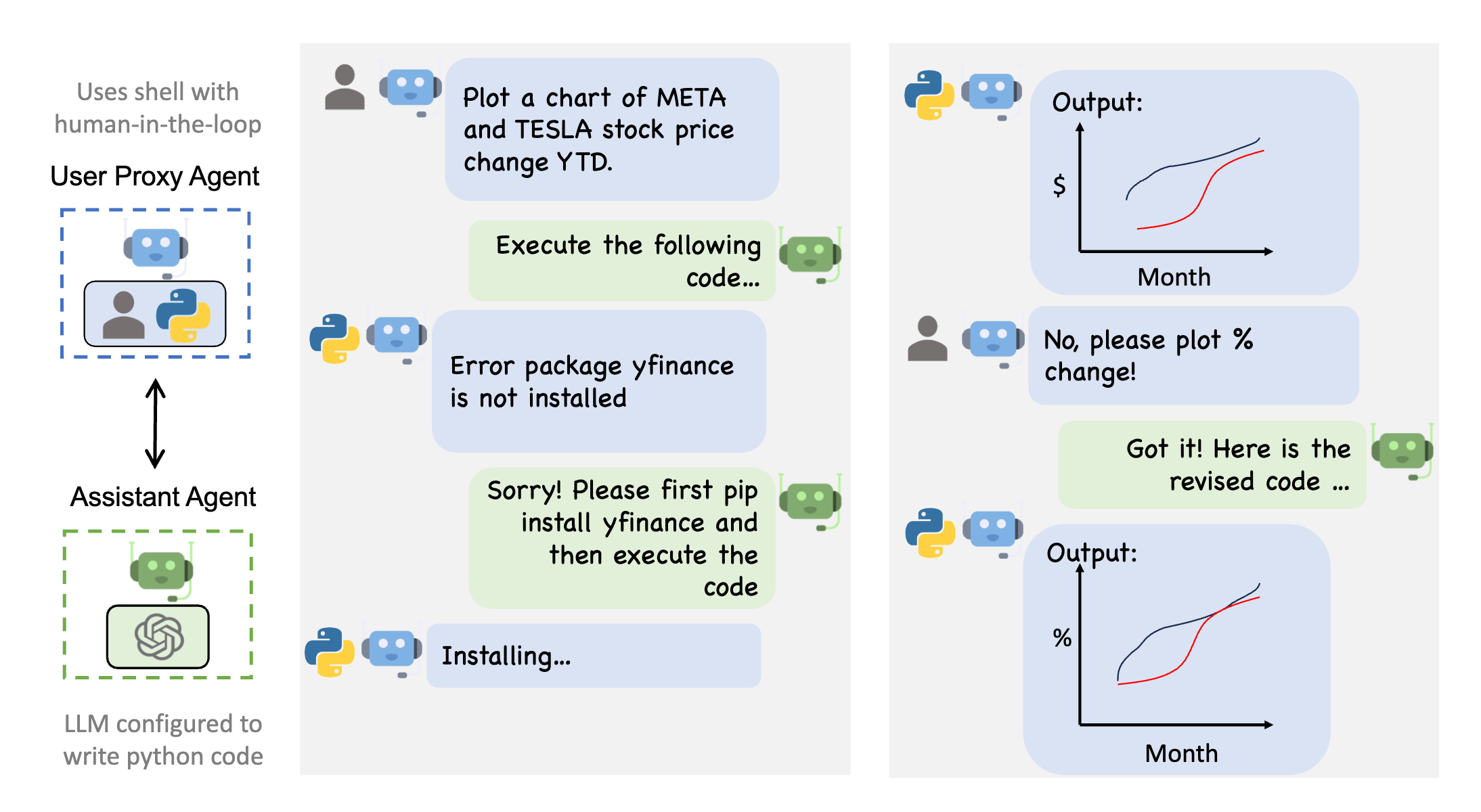
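The quick start code loads its model settings with config_list_from_json(env_or_file="OAI_CONFIG_LIST"), which reads a JSON list of model configurations from an environment variable or a file with that name. As a minimal sketch, assuming the format shown in the AutoGen docs at the time of writing (a list of dicts with "model" and "api_key" keys), you could set that environment variable from the notebook like this. The fallback key is only a placeholder; set OPENAI_API_KEY first or replace it.

```python
import json
import os

# Assumed OAI_CONFIG_LIST format: a JSON list of model configs,
# each with a "model" name and an "api_key".
config_list = [
    {
        "model": "gpt-4",
        # Reads your key from OPENAI_API_KEY; the fallback is only a placeholder
        "api_key": os.environ.get("OPENAI_API_KEY", "sk-your-key-here"),
    }
]

# Expose the list through an environment variable named OAI_CONFIG_LIST
os.environ["OAI_CONFIG_LIST"] = json.dumps(config_list)
```

Alternatively, you can save the same JSON to a file named OAI_CONFIG_LIST (with default arguments, relative to the working directory). If you do, keep that file out of version control, since it contains your key.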

As mentioned, AutoGen provides a framework where multiple agents and humans can converse with each other to collectively accomplish tasks.

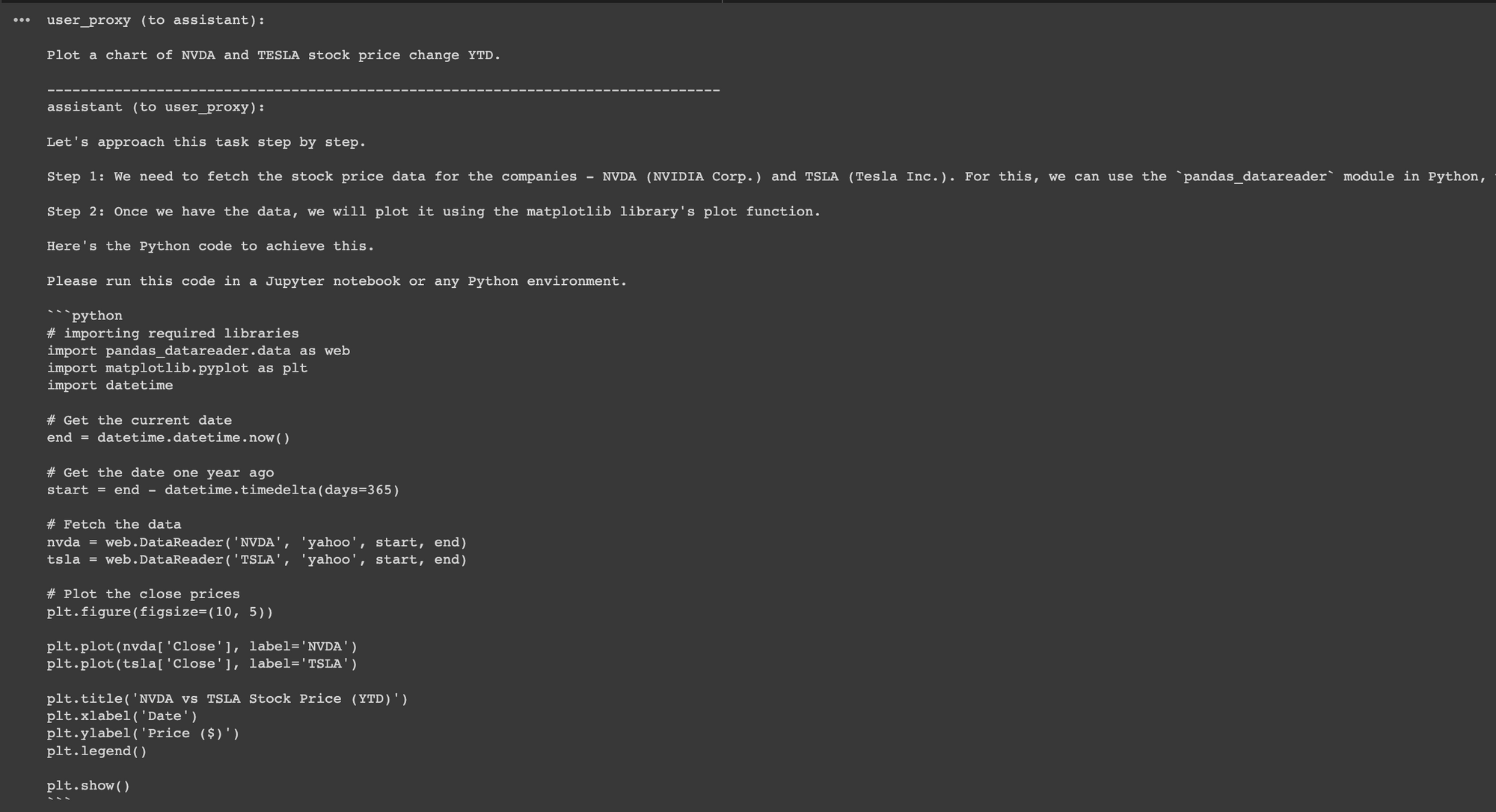

Here's the quick start code example for AutoGen:

from autogen import AssistantAgent, UserProxyAgent, config_list_from_json

# Load LLM inference endpoints from an environment variable or a file

config_list = config_list_from_json(env_or_file="OAI_CONFIG_LIST")

assistant = AssistantAgent("assistant", llm_config={"config_list": config_list})

user_proxy = UserProxyAgent("user_proxy", code_execution_config={"work_dir": "coding"})

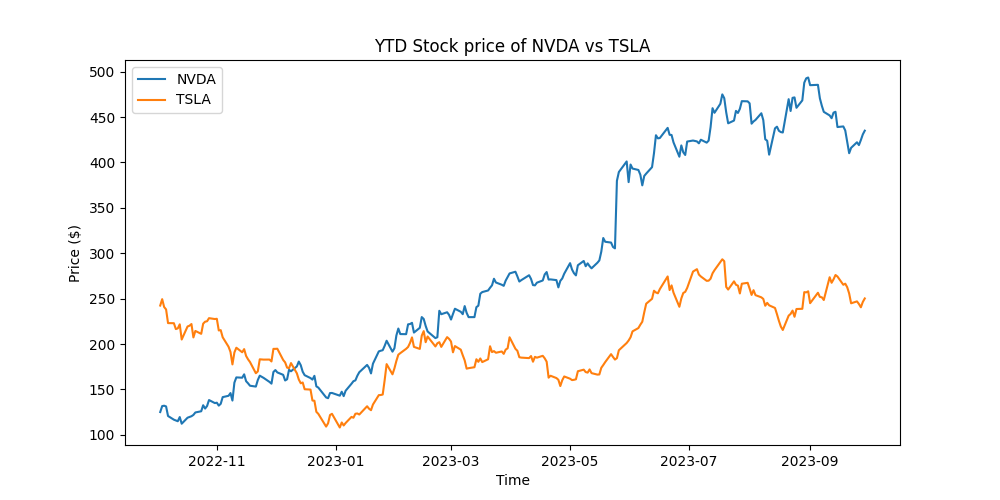

# Kickstart a conversation between the agents to plot a stock price chart

user_proxy.initiate_chat(assistant, message="Plot a chart of NVDA and TESLA stock price change YTD.")

As you can see, with this code the agent breaks the task of plotting a chart of NVDA and TSLA stock price change YTD into two steps:

- Fetching the stock price data for the companies

- Plotting the data with Matplotlib once it has been fetched

From there, the agent asks for feedback; you can either type a response or just press Enter to execute the suggested steps.

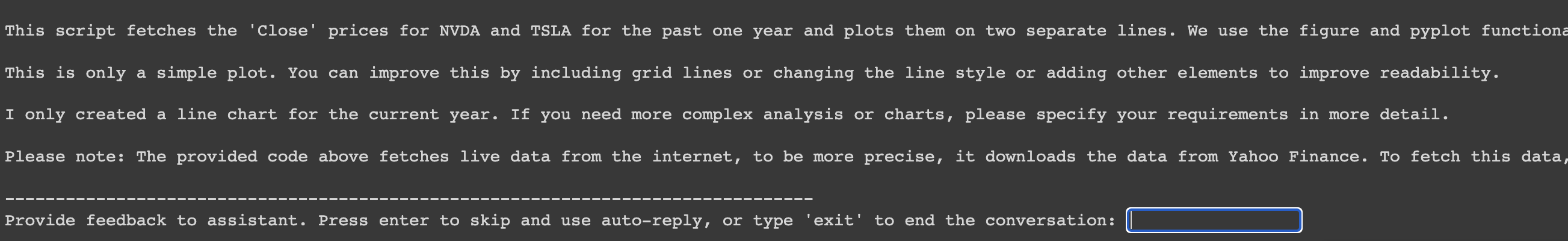

When I press Enter for this example, the initial code results in an error, which the agents are able to handle and resolve on their own. Very interesting.
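AutoGen's actual execution and retry logic is more involved, but the self-correction described above boils down to a simple loop: run the proposed code, and if it fails, send the error output back to the code writer for another attempt. Here is a toy sketch of that loop using only the standard library; toy_coder is a hypothetical stand-in for the LLM, and none of these function names come from AutoGen.

```python
import subprocess
import sys
from typing import Callable, Optional


def run_python(code: str) -> tuple[int, str]:
    """Run a code string in a fresh Python process; return (exit code, stdout + stderr)."""
    result = subprocess.run(
        [sys.executable, "-c", code], capture_output=True, text=True, timeout=60
    )
    return result.returncode, result.stdout + result.stderr


def feedback_loop(
    write_code: Callable[[Optional[str]], str], max_rounds: int = 3
) -> tuple[bool, str, int]:
    """Request code, run it, and feed any error output back until it succeeds."""
    error: Optional[str] = None
    output = ""
    for round_no in range(1, max_rounds + 1):
        code = write_code(error)  # the writer sees the previous error, if any
        exit_code, output = run_python(code)
        if exit_code == 0:
            return True, output, round_no
        error = output  # failure: hand the traceback back on the next round
    return False, output, max_rounds


def toy_coder(error: Optional[str]) -> str:
    """Hypothetical stand-in for the LLM: a buggy first draft, then a fix."""
    if error is None:
        return "print(undefined_name)"  # raises NameError
    if "NameError" in error:
        return "undefined_name = 'ok'\nprint(undefined_name)"
    return "raise SystemExit(1)"


ok, output, rounds = feedback_loop(toy_coder)
print(ok, rounds, output.strip())  # True 2 ok
```

The max_rounds cap matters: without it, a writer that never manages to fix its code would retry forever, which is exactly the infinite-loop failure mode mentioned at the start of this guide.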

When I press Enter again, it installs yfinance with pip to retrieve the stock prices and writes the correct Matplotlib code to plot and save the chart.

Good start.

Multi-Agent Conversation Framework

Alright, now let's look at one of the key features highlighted in the docs, the multi-agent conversation framework, which they describe as follows:

AutoGen offers a unified multi-agent conversation framework as a high-level abstraction of using foundation models

By automating chat among multiple capable agents, one can easily make them collectively perform tasks autonomously or with human feedback, including tasks that require using tools via code.

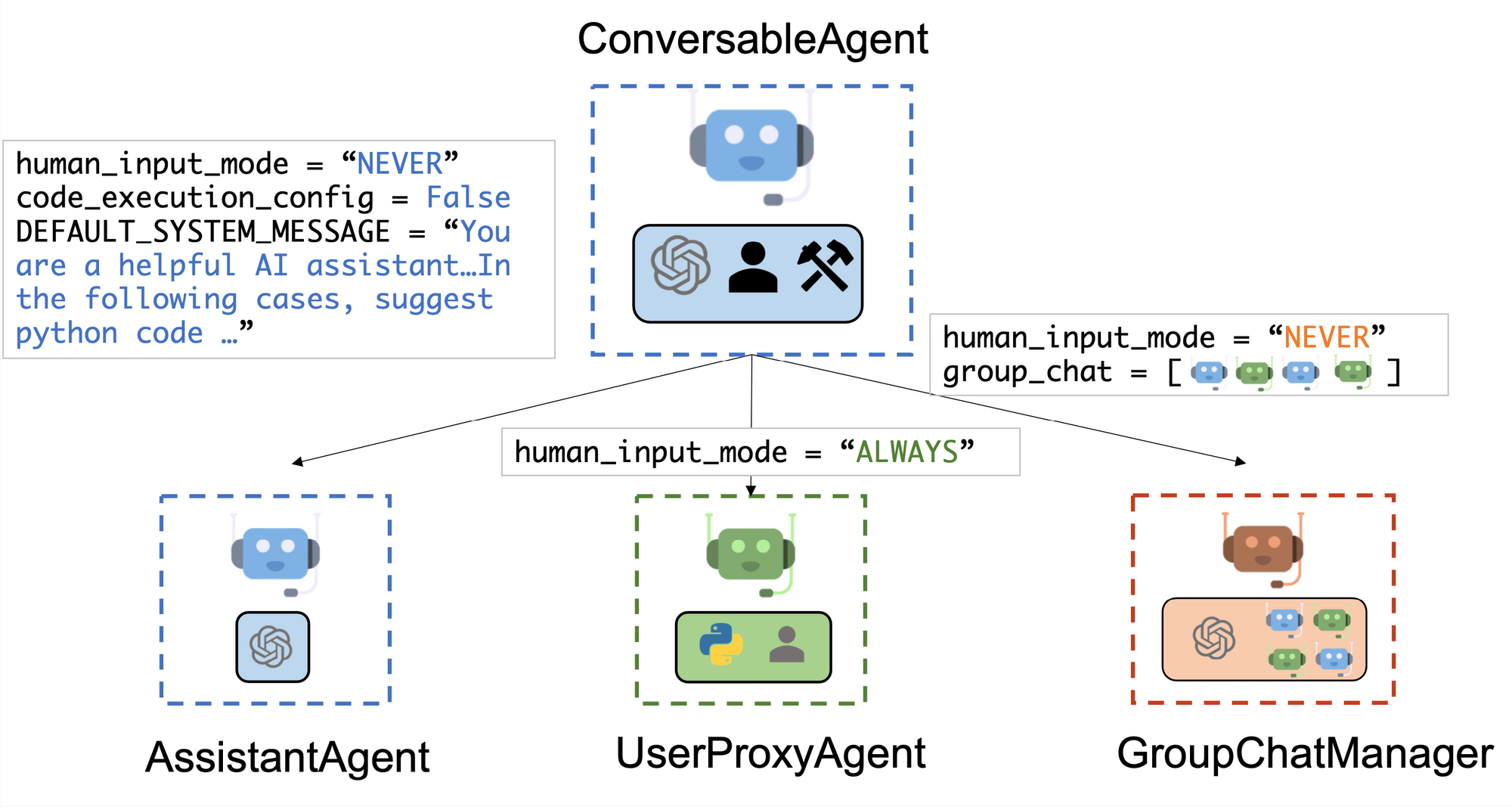

Below we can see the built-in agents in AutoGen:

Here's an overview of how this works:

- ConversableAgent is a generic class for communication-enabled agents that jointly complete tasks. It has two subclasses: AssistantAgent and UserProxyAgent.

- AssistantAgent:
  - Acts as an AI assistant that writes code for the task descriptions it receives.
  - Uses LLMs, like GPT-4, for code generation. Its behavior and LLM configuration are adjustable.
  - Can receive execution results and suggest fixes.

- UserProxyAgent:
  - Acts as a human proxy that solicits human input for its responses.
  - Can execute code, which can be toggled via code_execution_config.
  - LLM-based responses are disabled by default and can be enabled and configured via llm_config.

- The ConversableAgent auto-reply feature enables autonomous communication between agents while keeping the option of human intervention. We can also extend it by registering reply functions with the register_reply() method.
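To make the reply-function idea concrete, here is a toy agent (not AutoGen's class) that keeps an ordered list of reply functions. Each function returns a (final, reply) pair, and the first one that returns final=True supplies the reply; otherwise a default reply is used. AutoGen's real register_reply also takes a trigger argument that decides which senders a function responds to, and its reply functions receive more context than the plain message list used here, so treat this as a simplified sketch. ticker_fixer is a hypothetical reply function inspired by the FB/META mix-up in the example that follows.

```python
from typing import Callable, Optional

# A reply function sees the message history and returns (final, reply).
ReplyFunc = Callable[[list[dict]], tuple[bool, Optional[str]]]


class ToyAgent:
    """Toy agent with an ordered list of reply functions (illustration only, not AutoGen)."""

    def __init__(self, name: str, default_reply: str = "TERMINATE"):
        self.name = name
        self.default_reply = default_reply
        self._reply_funcs: list[ReplyFunc] = []

    def register_reply(self, func: ReplyFunc, position: int = 0) -> None:
        # In this toy, newly registered functions go to the front and run first
        self._reply_funcs.insert(position, func)

    def generate_reply(self, messages: list[dict]) -> str:
        # Try each reply function in order; the first "final" reply wins
        for func in self._reply_funcs:
            final, reply = func(messages)
            if final and reply is not None:
                return reply
        return self.default_reply


def ticker_fixer(messages: list[dict]) -> tuple[bool, Optional[str]]:
    """Hypothetical reply function: flag the outdated FB ticker."""
    if "FB" in messages[-1]["content"].split():
        return True, "Note: Meta's ticker is now META, not FB."
    return False, None


agent = ToyAgent("user_proxy")
agent.register_reply(ticker_fixer)
print(agent.generate_reply([{"role": "user", "content": "Downloading FB and AAPL prices"}]))
print(agent.generate_reply([{"role": "user", "content": "All done"}]))
```

Chaining small functions like this keeps each custom behavior separate: a function that does not apply simply returns (False, None) and lets the next one decide.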

Example of a Multi-Agent Conversation

OK, now let's look at a basic two-agent conversation. The following code asks:

What date is today? Which big tech stock has the largest year-to-date gain this year? How much is the gain?

```python
from autogen import AssistantAgent, UserProxyAgent

# create an AssistantAgent instance named "assistant"
assistant = AssistantAgent(name="assistant")

# create a UserProxyAgent instance named "user_proxy"
user_proxy = UserProxyAgent(name="user_proxy")

# the assistant receives a message from the user, which contains the task description
user_proxy.initiate_chat(
    assistant,
    message="""What date is today? Which big tech stock has the largest year-to-date gain this year? How much is the gain?""",
)
```

In this example, we have two agents: an AssistantAgent and a UserProxyAgent. The UserProxyAgent initiates a conversation with the AssistantAgent by sending a message containing the task description.

The AssistantAgent processes this message, breaks it down into actionable steps, and writes code to carry them out. The UserProxyAgent then either asks you for feedback or executes the code and sends the results back to the AssistantAgent.
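The back-and-forth described above can be sketched as a toy loop in plain Python: two reply functions take turns until one of them says TERMINATE or a turn limit is reached. Everything here is hypothetical and heavily simplified (there is no LLM, and the proxy uses exec, which you should never run on untrusted code); it only illustrates the assistant-writes, proxy-executes pattern from the agent descriptions earlier in this guide. The TERMINATE keyword and the "Run this:" prefix are conventions chosen for this sketch.

```python
import contextlib
import io
from typing import Callable

Reply = Callable[[str], str]


def run_chat(initiator: Reply, responder: Reply, message: str,
             max_turns: int = 10, stop_word: str = "TERMINATE") -> list[tuple[str, str]]:
    """Alternate replies between two agents until stop_word appears or max_turns runs out."""
    transcript = [("user_proxy", message)]
    speakers = [("assistant", responder), ("user_proxy", initiator)]
    for turn in range(max_turns):
        name, reply_fn = speakers[turn % 2]
        reply = reply_fn(transcript[-1][1])  # each agent answers the latest message
        transcript.append((name, reply))
        if stop_word in reply:
            break
    return transcript


def toy_assistant(message: str) -> str:
    """Hypothetical assistant: proposes code, then reports the executed result."""
    if message.startswith("exitcode: 0"):
        return f"The result is {message.splitlines()[1]}. TERMINATE"
    return "Run this: print(2 + 2)"


def toy_user_proxy(message: str) -> str:
    """Hypothetical proxy: executes the proposed code and returns its output."""
    code = message.removeprefix("Run this: ")
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(code, {})  # never exec untrusted code outside a sandbox
    return f"exitcode: 0\n{buffer.getvalue().strip()}"


for speaker, text in run_chat(toy_user_proxy, toy_assistant, "What is 2 + 2?"):
    print(f"{speaker}: {text}")
```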

In this example, GPT-4 still thought Meta's stock ticker was FB, so I used the feedback mechanism to let it know about the ticker change to META. With that feedback, it was able to correctly surmise that:

"According to the data retrieved from Yahoo Finance through the yfinance library, Meta Platforms Inc, is the tech stock with the largest Year-to-Date (YTD) gain of 144.43% as of the current date (at the time of writing)."

Even though this is a relatively simple example, I can already see that it handles errors much more gracefully and, unlike AutoGPT, hasn't been getting caught in infinite loops.

Along with the ease of providing human feedback to multiple agents, this is definitely going to be an interesting tool to work with.
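As an aside, the 144.43% figure in the agent's answer is a year-to-date percentage change: the latest closing price minus the first close of the year, divided by that first close, times 100. Here is a minimal sketch of that calculation and of picking the largest gain, using made-up tickers and prices rather than real market data.

```python
def ytd_gain_pct(first_close: float, latest_close: float) -> float:
    """Percentage change from the first close of the year to the latest close."""
    return (latest_close - first_close) / first_close * 100


# Hypothetical (first close, latest close) pairs, for illustration only
closes = {
    "AAA": (100.0, 150.0),
    "BBB": (80.0, 100.0),
    "CCC": (50.0, 45.0),
}

gains = {ticker: ytd_gain_pct(*pair) for ticker, pair in closes.items()}
leader = max(gains, key=gains.get)  # ticker with the largest YTD gain
print(leader, f"{gains[leader]:.2f}%")  # AAA 50.00%
```

By the same formula, a 144.43% gain means the latest price was about 2.44 times the first close of the year (1 + 144.43/100 = 2.4443).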

Now, before we conclude, let's look at a few use cases of what you can do with AutoGen and AI agents in general.

Use Cases of AI Agents & AutoGen

There's no question that AI agents, and AutoGen's framework for developing LLM applications through multi-agent conversations, lend themselves to a wide range of practical applications.

You can find several notebook examples of what you can automate with AutoGen in the AutoGen documentation, but here's an overview of potential use cases:

- Automated Task Coordination: Enables multiple agents to collaboratively tackle tasks through automated chat, streamlining code generation, execution, and debugging processes.

- Human-Machine Collaboration: Incorporates human feedback alongside automated agents to solve complex tasks, blending human expertise with automated efficiency.

- Tool Functionality Extension: Uses provided tools as functions within the chat, enhancing the capability of agents in problem-solving.

- Code Generation & Planning: Automates the process of code generation and strategic planning to solve tasks, reducing the manual effort required.

- Automated Data Visualization: Employs group chat for generating visual data representations, aiding in clearer data interpretation.

- Continual Learning: Enables automated continual learning from new data, promoting agent adaptability.

- Skill Teaching & Reuse: Allows for teaching new skills to agents and reusing them in future tasks through automated chat.

- Retrieval-Augmented Solutions: Combines code generation with question answering using retrieval-augmented agents, enhancing solution comprehensiveness.

- Hyperparameter Optimization: Introduces EcoOptiGen for cost-effective hyperparameter tuning of Large Language Models, optimizing performance for specific tasks like code generation and math problem solving.
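For the Tool Functionality Extension item, the underlying mechanism is OpenAI-style function calling: the model emits a function name plus a JSON string of arguments, and an executing agent looks the name up and calls the matching Python function. AutoGen agents accept a function_map for this; the dispatcher below is a standalone standard-library sketch of the same idea rather than AutoGen's code, and get_ytd_gain is a hypothetical tool.

```python
import json
from typing import Any, Callable


def get_ytd_gain(first_close: float, latest_close: float) -> float:
    """Hypothetical tool: percentage change from the first to the latest close."""
    return round((latest_close - first_close) / first_close * 100, 2)


# Tool registry: the function name the model sees -> the Python callable to run
function_map: dict[str, Callable[..., Any]] = {"get_ytd_gain": get_ytd_gain}


def execute_function_call(call: dict) -> dict:
    """Run an OpenAI-style {"name", "arguments"} call; wrap the result as a message."""
    func = function_map.get(call["name"])
    if func is None:
        content = f"Error: function {call['name']!r} not found."
    else:
        try:
            kwargs = json.loads(call["arguments"])  # arguments arrive as a JSON string
            content = str(func(**kwargs))
        except (json.JSONDecodeError, TypeError) as exc:
            # Malformed JSON or wrong argument names are reported back, not raised
            content = f"Error: {exc}"
    return {"role": "function", "name": call["name"], "content": content}


call = {"name": "get_ytd_gain", "arguments": '{"first_close": 80, "latest_close": 100}'}
print(execute_function_call(call))
```

Returning errors as message content, instead of raising, gives the model a chance to correct its call on the next turn, in the same spirit as the code-execution feedback shown earlier.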

As you can see, the list of possibilities with AI agents is quite extensive, so in future tutorials we'll work through several of these examples.

Summary: Getting Started with AutoGen

As I've been experimenting with AI agents, I quickly realized that previous open source agent frameworks didn't seem quite ready for production use, but with AutoGen I think the tide may have turned. The fact that the framework is backed by Microsoft makes it even more promising.

In the coming weeks, we'll continue to explore what's possible with AutoGen and see if we can start building more AI agents for more practical use cases. In the meantime, you can check out other tutorials on autonomous AI agents below:

- Getting Started with Auto-GPT: an Autonomous GPT-4 Experiment

- Getting Started with AutoGPT: MLQ Academy

- Adding Long Term Memory to AutoGPT with Pinecone

- AutoGPT & LangChain: Building an Automated Research Assistant

- AutoGPT & LangChain: Building an Automated Research Assistant - MLQ Academy

Resources

- AutoGen Documentation

- AutoGen Paper: Enabling Next-Gen LLM Applications via Multi-Agent Conversation Framework